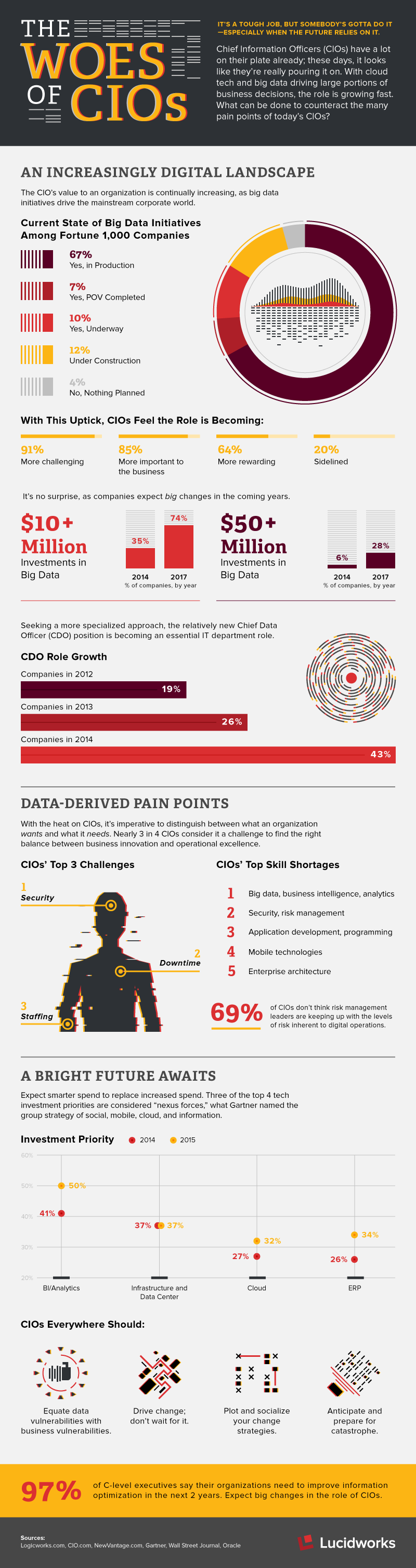

The post Infographic: The Woes of the CIOs appeared first on Lucidworks.


    [
      {
        "id": "this-is-my-id",
        "type": "slack",
        "subject": "Slackity Slack",
        "body": "This is a slack message that I am sending to the #bottestchannel",
        "to": ["bottestchannel"],
        "from": "bob"
      }
    ]

An example SMTP message might look like:

    [
      {
        "id": "foo",
        "type": "smtp",
        "subject": "Fusion Developer Position",
        "body": "Hi, I’m interested in the engineering posting listed at http://lucidworks.com/company/careers/",
        "to": ["careers@lucidworks.com"],
        "from": "bob@bob.com",
        "messageServiceParams": {
          "smtp.username": "robert.robertson@bob.com",
          "smtp.password": "XXXXXXXX"
        }
      }
    ]

Which aspects of the message are sent depends on how the Message Service template is set up. For instance, in the screen grab above, the Slack message service is set up to post the subject and the body as <subject>: <body> to Slack, as set by the Message Template attribute of the service.
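As a rough illustration of the <subject>: <body> template described above, the Python sketch below substitutes message attributes into angle-bracket placeholders. The render_template helper and its placeholder handling are assumptions made for illustration; Fusion's actual template engine may behave differently.

```python
import re

def render_template(template: str, message: dict) -> str:
    """Replace each <name> placeholder with the matching message attribute."""
    def substitute(match):
        key = match.group(1)
        # Leave unknown placeholders untouched rather than guessing a value.
        return str(message.get(key, match.group(0)))
    return re.sub(r"<(\w+)>", substitute, template)

message = {
    "subject": "Slackity Slack",
    "body": "This is a slack message that I am sending to the #bottestchannel",
}
print(render_template("<subject>: <body>", message))
```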

Tying this all together, we can send the actual message by POSTing the JSON above to the send endpoint, as shown in the screen grab from the Postman REST client plugin for Chrome:

| Message Attribute | Description |
| --- | --- |
| id | An application specific id for tracking the message. Must be unique. If you are not sure what to use, then generate a UUID. |
| type | The type of message to send. As of 1.4, may be: slack, smtp or log. Send a GET to http://HOST:PORT/api/apollo/messaging/ to get a list of supported types. |
| to | One or more destinations for the message, as a list. |
| from | Who/what the message is from. |
| subject | The subject of the message. |
| body | The main body of the message. |
| schedule | If the message should be sent at a later time or on a recurring basis, pass in the schedule object. See the Scheduler documentation for more information. |
| messageServiceParams | Pass in a map of any message service specific parameters. For instance, the SMTP Message Service requires the application to pass in the SMTP user and password. |
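To make the attribute table concrete, here is a minimal Python sketch that assembles a message payload with a generated UUID as the id. The build_message and to_payload helpers are hypothetical names introduced for this example; the sketch only builds the JSON and does not contact Fusion.

```python
import json
import uuid

def build_message(msg_type, subject, body, to, sender, params=None):
    """Assemble one message as a dict using the attribute names from the table."""
    message = {
        "id": str(uuid.uuid4()),  # unique id, generated as the table suggests
        "type": msg_type,
        "subject": subject,
        "body": body,
        "to": list(to),           # destinations are always sent as a list
        "from": sender,
    }
    if params:
        # Service-specific settings, e.g. SMTP credentials
        message["messageServiceParams"] = dict(params)
    return message

def to_payload(messages):
    """Serialize messages as a JSON array, matching the examples above."""
    return json.dumps(messages, indent=2)

payload = to_payload([
    build_message("slack", "Slackity Slack", "Hello from the sketch",
                  ["bottestchannel"], "bob")
])
print(payload)
```

The resulting string is what would be sent as the body of the POST request.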

The post Alert! Alert! Alert! (Implementing Alerts in Lucidworks Fusion) appeared first on Lucidworks.

    public interface StormTopologyFactory {
      String getName();
      StormTopology build(StreamingApp app) throws Exception;
    }

Let’s look at a simple example of a StormTopologyFactory implementation that defines a topology for indexing tweets into Solr:

    class TwitterToSolrTopology implements StormTopologyFactory {
      static final Fields spoutFields = new Fields("id", "tweet")

      String getName() { return "twitter-to-solr" }

      StormTopology build(StreamingApp app) throws Exception {
        // setup spout and bolts for accessing Spring-managed POJOs at runtime
        SpringSpout twitterSpout =
          new SpringSpout("twitterDataProvider", spoutFields);
        SpringBolt solrBolt =
          new SpringBolt("solrBoltAction", app.tickRate("solrBolt"));

        // wire up the topology to read tweets and send to Solr
        TopologyBuilder builder = new TopologyBuilder()
        builder.setSpout("twitterSpout", twitterSpout,
                          app.parallelism("twitterSpout"))
        builder.setBolt("solrBolt", solrBolt, app.parallelism("solrBolt"))
               .shuffleGrouping("twitterSpout")

        return builder.createTopology()
      }
    }

A couple of things should stand out to you in this listing. First, there’s no command-line parsing, environment-specific configuration handling, or any code related to running this topology. All that you see here is code defining a StormTopology; StreamingApp handles all the boring stuff for you. Second, the code is quite easy to understand because it only does one thing. Lastly, this class is written in Groovy instead of Java, which helps keep things nice and tidy, and I find Groovy more enjoyable to write. Of course, if you don’t want to use Groovy, you can use Java, as the framework supports both seamlessly.

The following diagram depicts the TwitterToSolrTopology. A key aspect of the solution is the use of the Spring framework to manage beans that implement application specific logic in your topology and leave the Storm boilerplate work to reusable components: SpringSpout and SpringBolt.

    java -classpath $STORM_HOME/lib/*:target/storm-solr-1.0.jar com.lucidworks.storm.StreamingApp \
      example.twitter.TwitterToSolrTopology -localRunSecs 90

The command above will run the TwitterToSolrTopology for 90 seconds on your local workstation and then shut down. All the setup work is provided by the StreamingApp class. To submit to a remote cluster, you would do:

    $STORM_HOME/bin/storm jar target/storm-solr-1.0.jar com.lucidworks.storm.StreamingApp \
      example.twitter.TwitterToSolrTopology -env staging

Notice that I’m using the -env flag to indicate I’m running in my staging environment. It’s common to need to run a Storm topology in different environments, such as test, staging, and production, so that’s built into the StreamingApp framework.

So far, I’ve shown you how to define a topology and how to run it. Now let’s get into the details of how to implement components in a topology. Specifically, let’s see how to build a bolt that indexes data into Solr, as this illustrates many of the key features of the framework.

SpringBolt solrBolt = new SpringBolt("solrBoltAction", app.tickRate("solrBolt"));

This creates an instance of SpringBolt that delegates message processing to a Spring-managed bean with ID “solrBoltAction”.

The main benefit of the SpringBolt is it allows us to separate Storm-specific logic and boilerplate code from application logic. The com.lucidworks.storm.spring.SpringBolt class allows you to implement your bolt logic as a simple Spring-managed POJO (Plain Old Java Object). To leverage SpringBolt, you simply need to implement the StreamingDataAction interface:

    public interface StreamingDataAction {
      SpringBolt.ExecuteResult execute(Tuple input, OutputCollector collector);
    }

At runtime, Storm will create one or more instances of SpringBolt per JVM. The number of instances created depends on the parallelism hint configured for the bolt. In the Twitter example, we simply pulled the number of tasks for the Solr bolt from our configuration:

    // wire up the topology to read tweets and send to Solr
    ...
    builder.setBolt("solrBolt", solrBolt, app.parallelism("solrBolt"))
    ...

The SpringBolt needs a reference to the solrBoltAction bean from the Spring ApplicationContext. The solrBoltAction bean is defined in resources/storm-solr-spring.xml as:

    <bean id="solrBoltAction"
          class="com.lucidworks.storm.solr.SolrBoltAction"
          scope="prototype">
      <property name="solrInputDocumentMapper" ref="solrInputDocumentMapper"/>
      <property name="maxBufferSize" value="${maxBufferSize}"/>
      <property name="bufferTimeoutMs" value="${bufferTimeoutMs}"/>
    </bean>

There are a couple of interesting aspects of this bean definition. First, the bean is defined with prototype scope, which means that Spring will create a new instance for each SpringBolt instance that Storm creates at runtime. This is important because it means your bean instance will only be accessed by one thread at a time, so you don’t need to worry about thread-safety issues. Also notice that the maxBufferSize and bufferTimeoutMs properties are set using Spring’s dynamic variable resolution syntax, e.g. ${maxBufferSize}. These properties will be resolved during bean construction from a configuration file called resources/Config.groovy.
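The maxBufferSize and bufferTimeoutMs property names suggest that the action batches documents before sending them to Solr. The Python sketch below illustrates only that general size-or-timeout batching idea; the BufferingAction class, its method names, and its flush semantics are assumptions for illustration and are not taken from the actual SolrBoltAction source.

```python
import time

class BufferingAction:
    """Collects documents and flushes when the buffer is full or too old."""

    def __init__(self, send_batch, max_buffer_size=100,
                 buffer_timeout_ms=500, clock=time.monotonic):
        self.send_batch = send_batch          # callable that receives a list of docs
        self.max_buffer_size = max_buffer_size
        self.buffer_timeout_ms = buffer_timeout_ms
        self.clock = clock                    # injectable so tests can fake time
        self.buffer = []
        self.oldest = None                    # arrival time of the first buffered doc

    def execute(self, doc):
        """Buffer one document; return True if this call triggered a flush."""
        if not self.buffer:
            self.oldest = self.clock()
        self.buffer.append(doc)
        return self._flush_if_needed()

    def tick(self):
        """Called periodically so a partly filled buffer is not held forever."""
        return self._flush_if_needed()

    def _flush_if_needed(self):
        if not self.buffer:
            return False
        age_ms = (self.clock() - self.oldest) * 1000
        if len(self.buffer) >= self.max_buffer_size or age_ms >= self.buffer_timeout_ms:
            self.send_batch(list(self.buffer))
            self.buffer.clear()
            return True
        return False
```

Because each bolt would own its own instance (the prototype-scope point above), the sketch uses no locking.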

When the SpringBolt needs a reference to solrBoltAction bean, it first needs to get the Spring ApplicationContext. The StreamingApp class is responsible for bootstrapping the Spring ApplicationContext using storm-solr-spring.xml. StreamingApp ensures there is only one Spring context initialized per JVM instance per topology as multiple topologies may be running in the same JVM.

If you’re concerned about the Spring container being too heavyweight, rest assured there is only one container initialized per JVM per topology and bolts and spouts are long-lived objects that only need to be initialized once by Storm per task. Put simply, the overhead of Spring is quite minimal especially for long-running streaming applications.
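The two ideas above (one context per topology, and a fresh prototype-scoped bean per lookup) can be sketched in a few lines of Python. This is a conceptual stand-in, not Spring or the framework's code: SpringLikeContext, get_context, and SolrBoltActionStub are hypothetical names, and the sketch ignores thread-safety of the cache itself.

```python
class SpringLikeContext:
    """Tiny stand-in for an application context holding bean factories."""

    def __init__(self, factories):
        self._factories = factories

    def get_bean(self, bean_id):
        # Prototype scope: every lookup builds a fresh instance.
        return self._factories[bean_id]()

_contexts = {}  # one cached context per topology name within this process

def get_context(topology_name, factories):
    """Return the single context for a topology, creating it on first use."""
    if topology_name not in _contexts:
        _contexts[topology_name] = SpringLikeContext(factories)
    return _contexts[topology_name]

class SolrBoltActionStub:
    """Placeholder for an application bean."""

factories = {"solrBoltAction": SolrBoltActionStub}
ctx_a = get_context("twitter-to-solr", factories)
ctx_b = get_context("twitter-to-solr", factories)
print("same context reused:", ctx_a is ctx_b)
print("beans are distinct:",
      ctx_a.get_bean("solrBoltAction") is not ctx_a.get_bean("solrBoltAction"))
```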

The framework also provides a SpringSpout that allows you to implement a data provider as a simple Spring-managed POJO. I’ll refer you to the source code for more details about SpringSpout but it basically follows the same design patterns as SpringBolt.

    @Metric public Timer sendBatchToSolr;

The SolrBoltAction class provides several examples of how to use metrics in your bean implementations. At this point you should have a basic understanding of the main features of the framework. Now let’s turn our attention to some Solr-specific features.

    <bean id="solrJsonBoltAction"
          class="com.lucidworks.storm.solr.SolrJsonBoltAction"
          scope="prototype">
      <property name="split" value="/"/>
      <property name="fieldMappings">
        <list>
          <value>$FQN:/**</value>
        </list>
      </property>
    </bean>

The post Integrating Storm and Solr appeared first on Lucidworks.

Following in the footsteps of the percentile support added to Solr’s StatsComponent in 5.1, Solr 5.2 will add efficient set cardinality using the HyperLogLog algorithm.

Like most of the existing stat component options, cardinality of a field (or function values) can be requested using a simple local param option with a true value. For example…

$ curl 'http://localhost:8983/solr/techproducts/query?rows=0&q=*:*&stats=true&stats.field=%7B!count=true+cardinality=true%7Dmanu_id_s'

    {
      "responseHeader":{
        "status":0,
        "QTime":3,
        "params":{
          "stats.field":"{!count=true cardinality=true}manu_id_s",
          "stats":"true",
          "q":"*:*",
          "rows":"0"}},
      "response":{"numFound":32,"start":0,"docs":[]
      },
      "stats":{
        "stats_fields":{
          "manu_id_s":{
            "count":18,
            "cardinality":14}}}}

Here we see that in the techproducts sample data, the 32 (numFound) documents contain 18 (count) total values in the manu_id_s field, and of those, 14 (cardinality) are unique.
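For readers scripting this kind of request, the following Python sketch builds the same stats query URL and extracts the count and cardinality from a response body. The helper names stats_query_url and field_stats are hypothetical, and the sample response is trimmed from the output shown above; nothing here contacts a Solr server.

```python
import json
from urllib.parse import urlencode

def stats_query_url(base_url, field, local_params):
    """Build a stats-only query URL with local params on stats.field."""
    params = {
        "rows": 0,
        "q": "*:*",
        "stats": "true",
        # Local params go inside {!...} directly before the field name
        "stats.field": "{!%s}%s" % (local_params, field),
    }
    return base_url + "?" + urlencode(params)

def field_stats(response_text, field):
    """Pull the stats entry for one field out of a JSON response body."""
    return json.loads(response_text)["stats"]["stats_fields"][field]

url = stats_query_url("http://localhost:8983/solr/techproducts/query",
                      "manu_id_s", "count=true cardinality=true")
sample = '{"stats":{"stats_fields":{"manu_id_s":{"count":18,"cardinality":14}}}}'
stats = field_stats(sample, "manu_id_s")
print(url)
print("total values:", stats["count"], "unique (estimated):", stats["cardinality"])
```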

And of course, like all stats, this can be combined with pivot facets to find things like the number of unique manufacturers per category…

$ curl 'http://localhost:8983/solr/techproducts/query?rows=0&q=*:*&facet=true&stats=true&facet.pivot=%7B!stats=s%7Dcat&stats.field=%7B!tag=s+cardinality=true%7Dmanu_id_s'

    {
      "responseHeader":{
        "status":0,
        "QTime":4,
        "params":{
          "facet":"true",
          "stats.field":"{!tag=s cardinality=true}manu_id_s",
          "stats":"true",
          "q":"*:*",
          "facet.pivot":"{!stats=s}cat",
          "rows":"0"}},
      "response":{"numFound":32,"start":0,"docs":[]
      },
      "facet_counts":{
        "facet_queries":{},
        "facet_fields":{},
        "facet_dates":{},
        "facet_ranges":{},
        "facet_intervals":{},
        "facet_heatmaps":{},
        "facet_pivot":{
          "cat":[{
              "field":"cat",
              "value":"electronics",
              "count":12,
              "stats":{
                "stats_fields":{
                  "manu_id_s":{
                    "cardinality":8}}}},
            {
              "field":"cat",
              "value":"currency",
              "count":4,
              "stats":{
                "stats_fields":{
                  "manu_id_s":{
                    "cardinality":4}}}},
            {
              "field":"cat",
              "value":"memory",
              "count":3,
              "stats":{
                "stats_fields":{
                  "manu_id_s":{
                    "cardinality":1}}}},
            {
              "field":"cat",
              "value":"connector",
              "count":2,
              "stats":{
                "stats_fields":{
                  "manu_id_s":{
                    "cardinality":1}}}},
    ...

cardinality=true vs countDistinct=true

Astute readers may ask: “Hasn’t Solr always supported cardinality using the stats.calcdistinct=true option?” The answer to that question is: sort of.

The calcdistinct option has never been recommended for anything other than trivial use cases because it used a very naive implementation of computing set cardinality: it built in memory (and returned to the client) a full set of all the distinctValues. This performs fine for small sets, but as the cardinality increases, it becomes trivial to crash a server with an OutOfMemoryError with only a handful of concurrent users. In a distributed search, the behavior is even worse (and much slower) since all of those full sets on each shard must be sent over the wire to the coordinating node to be merged.

Solr 5.2 improves things slightly by splitting the calcdistinct=true option in two and letting clients request countDistinct=true independently from the set of all distinctValues=true. Under the covers Solr is still doing the same amount of work (and in distributed requests, the nodes are still exchanging the same amount of data) but asking only for countDistinct=true spares the clients from having to receive the full set of all values.

The new cardinality option differs in that it uses the probabilistic “HyperLogLog” (HLL) algorithm to estimate the cardinality of the sets in a fixed amount of memory. Wikipedia explains the details far better than I could, but the key points Solr users should be aware of are:

countDistinct=true (assuming no hash collisions)
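To give a feel for how an HLL estimator works, here is a minimal, self-contained Python sketch of the textbook algorithm. It is not the java-hll implementation that Solr uses: the SHA-1 based hash, the log2m default, and the simple small-range correction are assumptions chosen to keep the example short.

```python
import hashlib
import math

class HyperLogLog:
    """Textbook HyperLogLog: fixed-size registers, approximate distinct count."""

    def __init__(self, log2m=11):
        self.p = log2m
        self.m = 1 << log2m            # number of registers; memory never grows
        self.registers = [0] * self.m

    def add(self, value):
        # Hash the value to 64 bits (first 8 bytes of SHA-1, for illustration).
        h = int.from_bytes(hashlib.sha1(str(value).encode()).digest()[:8], "big")
        idx = h >> (64 - self.p)                    # top p bits pick a register
        rest = h & ((1 << (64 - self.p)) - 1)       # remaining 64-p bits
        # Rank = position of the leftmost 1-bit in the remaining bits.
        rank = (64 - self.p) - rest.bit_length() + 1
        self.registers[idx] = max(self.registers[idx], rank)

    def estimate(self):
        alpha = 0.7213 / (1 + 1.079 / self.m)       # bias constant for m >= 128
        raw = alpha * self.m * self.m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * self.m and zeros:
            # Small-range correction: fall back to linear counting.
            return self.m * math.log(self.m / zeros)
        return raw

hll = HyperLogLog()
for i in range(10000):
    hll.add("value-%d" % i)
    hll.add("value-%d" % i)   # duplicates never change the registers
print("estimated distinct values:", round(hll.estimate()))
```

With 2048 registers the expected standard error is roughly 1.04 / sqrt(2048), or about 2.3%, regardless of how many values are added.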

The examples we’ve seen so far used cardinality=true as a local param; this is actually just syntactic sugar for cardinality=0.33. Any number between 0.0 and 1.0 (inclusive) can be specified to indicate how the user would like to trade off RAM vs accuracy:

cardinality=0.0: “Use the minimum amount of RAM supported to give me a very rough approximate value”

cardinality=1.0: “Use the maximum amount of RAM supported to give me the most accurate approximate value possible”

Internally these floating point values, along with some basic heuristics about the Solr field type (i.e., 32-bit field types like int and float have a much smaller max-possible cardinality than fields like long, double, strings, etc.) are used to tune the “log2m” and “regwidth” options of the underlying java-hll implementation. Advanced Solr users can provide explicit values for these options using the hllLog2m and hllRegwidth localparams; see the StatsComponent documentation for more details.

To showcase the trade-offs between the old countDistinct logic and the new HLL-based cardinality option, I set up a simple benchmark to compare them.

The initial setup is fairly straightforward:

bin/solr -e cloud -noprompt to set up a 2 node cluster containing 1 collection with 2 shards and 2 replicas

long_data_ls: A multivalued numeric field containing 3 random “long” values

string_data_ss: A multivalued string field containing the same 3 values (as strings)

Note that because we generated 3 random values in each field for each document, we expect the cardinality results of each query to be ~3x the number of documents matched by that query. (Some minor variations may exist if multiple documents happen to contain the same randomly generated field values.)

With this pre-built index, and this set of pre-generated random queries, we can then execute the query set over and over again with different options to compute the cardinality. Specifically, for both of our test fields, the following stats.field variants were tested:

{!key=k countDistinct=true}

{!key=k cardinality=true} (the same as 0.33)

{!key=k cardinality=0.5}

{!key=k cardinality=0.7}

For each field, and each stats.field, 3 runs of the full query set were executed sequentially using a single client thread, and both the resulting cardinality as well as the mean+stddev of the response time (as observed by the client) were recorded.
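The measurement described above (client-observed response time, summarized as mean and standard deviation) might be collected along these lines. This is a sketch, not the benchmark code used for the post: run_query is a hypothetical callable standing in for whatever issues the request, and a trivial computation is used in its place here.

```python
import statistics
import time

def time_queries(run_query, queries):
    """Return the client-observed time of each query, in milliseconds."""
    timings = []
    for query in queries:
        start = time.perf_counter()
        run_query(query)
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings

def summarize(timings_ms):
    """Mean and sample standard deviation of a list of timings."""
    return statistics.mean(timings_ms), statistics.stdev(timings_ms)

# Stand-in workload; a real run would send each query to Solr instead.
timings = time_queries(lambda q: sum(range(1000)), ["q1", "q2", "q3"])
mean_ms, stddev_ms = summarize(timings)
print("mean=%.3fms stddev=%.3fms" % (mean_ms, stddev_ms))
```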

Looking at graphs of the raw numbers returned by each approach isn’t very helpful; it basically just looks like a perfectly straight line with a slope of 1, which is good. A straight line means we got the answers we expect.

But the devil is in the details. What we really need to look at in order to meaningfully compare the measured accuracy of the different approaches is the “relative error“. As we can see in this graph below, the most accurate results clearly come from using countDistinct=true. After that cardinality=0.7 is a very close second, and the measured accuracy gets worse as the tuning value for the cardinality option gets lower.
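Relative error here means the size of the miss as a fraction of the true value. A minimal Python sketch, using made-up numbers purely for illustration:

```python
def relative_error(estimated, actual):
    """Absolute difference between estimate and truth, as a fraction of the truth."""
    if actual == 0:
        raise ValueError("relative error is undefined when the actual value is 0")
    return abs(estimated - actual) / actual

# Hypothetical values: an estimate of 29,700 against a true cardinality of 30,000
print("%.2f%%" % (100 * relative_error(29700, 30000)))
```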

Looking at these results, you may wonder: Why bother using the new cardinality option at all?

To answer that question, let’s look at the next 2 graphs. The first shows the mean request time (as measured from the query client) as the number of values expected in the set grows. There is a lot of noise in this graph at the low values due to poor warming queries on my part in the testing process, so the second graph shows a cropped view of the same data.

Here we start to see some obvious advantage in using the cardinality option. While the countDistinct response times continue to grow and get more and more unpredictable — largely because of extensive garbage collection — the cost (in processing time) of using the cardinality option practically levels off. So it becomes fairly clear that if you can accept a small bit of approximation in your set cardinality statistics, you can gain a lot of confidence and predictability in the behavior of your queries. And by tuning the cardinality parameter, you can trade off accuracy for the amount of RAM used at query time, with relatively minor impacts on response time performance.

If we look at the results for the string field, we can see that the accuracy results are virtually identical to those of the numeric fields, and the request time performance of the cardinality option is consistent with them (due to hashing). The request time performance of countDistinct, however, completely falls apart, even though these are relatively small string values.

I would certainly never recommend anyone use countDistinct with non-trivial string fields.

There are still several things about the HLL implementation that could be made “user tunable” with a few more request time knobs/dials once users get a chance to try out and experiment with this new feature and give feedback. I think the biggest bang for the buck, though, will be to add index time hashing support, which should help a lot in speeding up the response times of cardinality computations using the classic trade-off: do more work at index time, and make your on-disk index a bit larger, to save CPU cycles at query time and reduce query response time.

The post Efficient Field Value Cardinality Stats in Solr 5.2: HyperLogLog appeared first on Lucidworks.

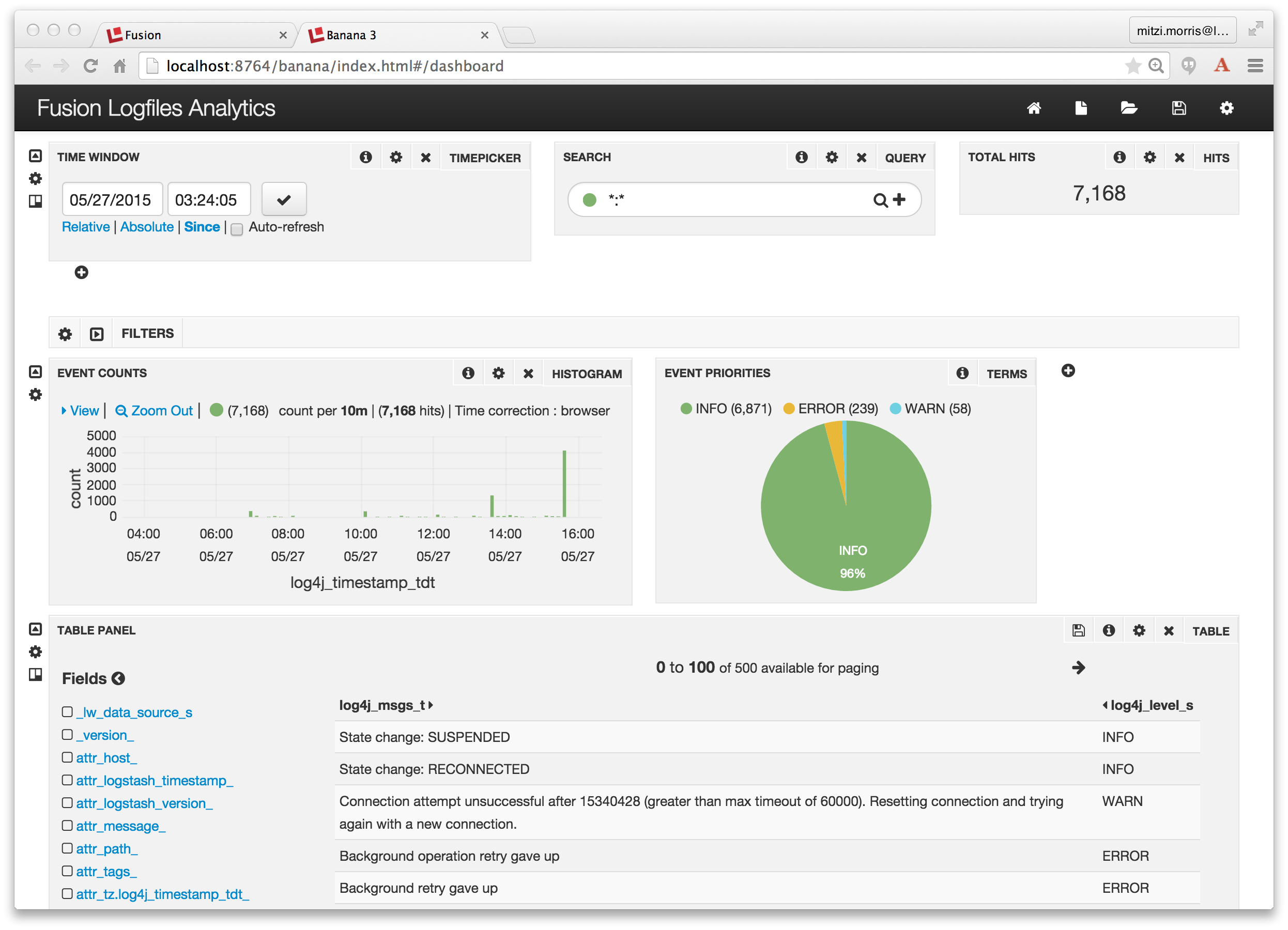

Lucidworks Fusion 1.4 now ships with plugins for Logstash. In my previous post on Log Analytics with Fusion, I showed how Fusion Dashboards provide interactive visualization over time-series data, using a small CSV file of cleansed server-log data. Today, I use Logstash to analyze Fusion’s logfiles – real live messy data!

Logstash is an open-source log management tool. Logstash takes inputs from one or more logfiles, parses and filters them according to a set of configurations, and outputs a stream of JSON objects where each object corresponds to a log event. Fusion 1.4 ships with a Logstash deployment plus a custom ruby class lucidworks_pipeline_output.rb which collects Logstash outputs and sends them to Solr for indexing into a Fusion collection.

Logstash filters can be used to normalize time and date formats, providing a unified view of a sequence of user actions which span multiple logfiles. For example, in an ecommerce application, where user search queries are recorded by the search server using one format and user browsing actions are recorded by the web server in a different format, Logstash provides a way of normalizing and unifying this information into a clearer picture of user behavior. Date and timestamp formats are some of the major pain points of text processing. Fusion provides custom date formatting, because your dates are a key part of your data. For log analytics, when visualizations include a timeline, timestamps are the key data.

In order to map Logstash records into Solr fielded documents you need to have a working Logstash configuration script that runs over your logfiles. If you’re new to Logstash, don’t panic! I’ll show you how to write a Logstash configuration script and then use it to index Fusion’s own logfiles. All you need is a running instance of Fusion 1.4 and you can try this at home, so if you haven’t done so already, download and install Fusion. Detailed instructions are in the online Fusion documentation: Installing Lucidworks Fusion.

Fusion Components and their Logfiles

What do Fusion’s log files look like? Fusion integrates many open-source and proprietary tools into a fault-tolerant, flexible, and highly scalable search and indexing system. A Fusion deployment consists of the following components:

Each of these components runs as a process in its own JVM. This allows for distributing and replicating services across servers for performance and scalability. On startup, Fusion reports on its components and the ports that they are listening on. For a local single-server installation with the default configuration, the output is similar to this:

    2015-04-10 12:26:44Z Starting Fusion Solr on port 8983
    2015-04-10 12:27:14Z Starting Fusion API Services on port 8765
    2015-04-10 12:27:19Z Starting Fusion UI on port 8764
    2015-04-10 12:27:25Z Starting Fusion Connectors on port 8984

All Fusion services use the Apache log4j logging utility. For the default Fusion deployment, the log4j directives are found in the files $FUSION/jetty/{service}/resources/log4j2.xml and logging output is written to the files $FUSION/logs/{service}/{service}.log, where service is either “api”, “connectors”, “solr”, or “ui”. $FUSION is shorthand for the full path to the top-level directory of the Fusion archive, a.k.a. the Fusion home directory; e.g., if you’ve unpacked the Fusion download in /opt/lucidworks, $FUSION refers to the directory /opt/lucidworks/fusion. The default deployment for each of these components has most logfile message levels set at “$INFO”, resulting in lots of logfile messages. On startup, Fusion sends a couple hundred messages to these logfiles. By the time you’ve configured your collection, datasource, and pipeline, you’ll have plenty of data to work with!

Data Design: Logfile Patterns

First I need to do some preliminary data analysis and data design. What logfile data do I want to extract and analyze?

All the log4j2.xml configuration files for the Fusion services use the same Log4j pattern layout:

    <PatternLayout>
      <pattern>%d{ISO8601} - %-5p [%t:%C{1}@%L] - %m%n</pattern>
    </PatternLayout>

In the Log4j syntax, the percent sign is followed by a conversion specifier (think c-style printf). In this pattern, the conversion specifiers used are:

Here’s what the resulting log file messages look like:

    2015-05-21T11:30:59,979 - INFO [scheduled-task-pool-2:MetricSchedulesRegistrar@70] - Metrics indexing will be enabled
    2015-05-21T11:31:00,198 - INFO [qtp1990213994-17:SearchClusterComponent$SolrServerLoader@368] - Solr version 4.10.4, using JavaBin protocol
    2015-05-21T11:31:00,300 - INFO [solr-flush-0:SolrZkClient@210] - Using default ZkCredentialsProvider

To start with, I want to capture the timestamp, the priority of the logging event, and the application supplied message. The timestamp should be stored in a Solr TrieDateField, the event priority is a set of strings used for faceting, and the application supplied message should be stored as searchable text. Thus, in my Solr index, I want fields:

Note that these field names all have suffixes which encode the field type: the suffix “_tdt” is used for Solr TrieDateFields, the suffix “_s” is used for Solr string fields, and the suffix “_t” is used for Solr text fields.

A grok filter is applied to input line(s) from a logfile and outputs a Logstash event which is a list of field-value pairs produced by matches against a grok pattern. A grok pattern is specified as: %{SYNTAX:SEMANTIC}, where SYNTAX is the pattern to match against, SEMANTIC is the field name in the Logstash event. The Logstash grok filter used for this example is:

%{TIMESTAMP_ISO8601:log4j_timestamp_tdt} *-* %{LOGLEVEL:log4j_level_s}\s+*[*\S+*]* *-* %{GREEDYDATA:log4j_msgs_t}

This filter uses three grok patterns to match the log4j layout:

TIMESTAMP_ISO8601 matches the log4j timestamp pattern %d{ISO8601}.

LOGLEVEL matches the Priority pattern %p.

GREEDYDATA pattern can be used to match everything left on the line.

I used the Grok Constructor tool to develop and test my log4j grok filter. I highly recommend this tool and associated website to all Logstash noobs – I learned a lot!

To skip over the [thread:class@line] - information, I’m using the regex "*[*\S+*]* *-*". Here the asterisks act like single quotes to escape characters which otherwise would be syntactically meaningful. This regex fails to match class names which contain whitespace, but it fails conservatively, so the final greedy pattern will still capture the entire application output message.
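For readers more familiar with plain regular expressions than grok, the filter above corresponds roughly to the Python pattern below. This is an approximation written for illustration: the timestamp and level sub-patterns are simplified stand-ins for grok's TIMESTAMP_ISO8601 and LOGLEVEL definitions, and unlike the grok filter it simply returns no match when the bracketed section contains whitespace.

```python
import re

LOG4J_LINE = re.compile(
    r"^(?P<log4j_timestamp_tdt>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2},\d{3})"  # ISO8601 with comma millis
    r" - (?P<log4j_level_s>[A-Z]+)\s+\[\S+\]"                               # level, then skipped [thread:class@line]
    r" - (?P<log4j_msgs_t>.*)$"                                             # rest of the line is the message
)

def parse_line(line):
    """Return a dict of the three captured fields, or None if the line does not match."""
    match = LOG4J_LINE.match(line)
    return match.groupdict() if match else None

sample = ("2015-05-21T11:30:59,979 - INFO  "
          "[scheduled-task-pool-2:MetricSchedulesRegistrar@70] - "
          "Metrics indexing will be enabled")
print(parse_line(sample))
```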

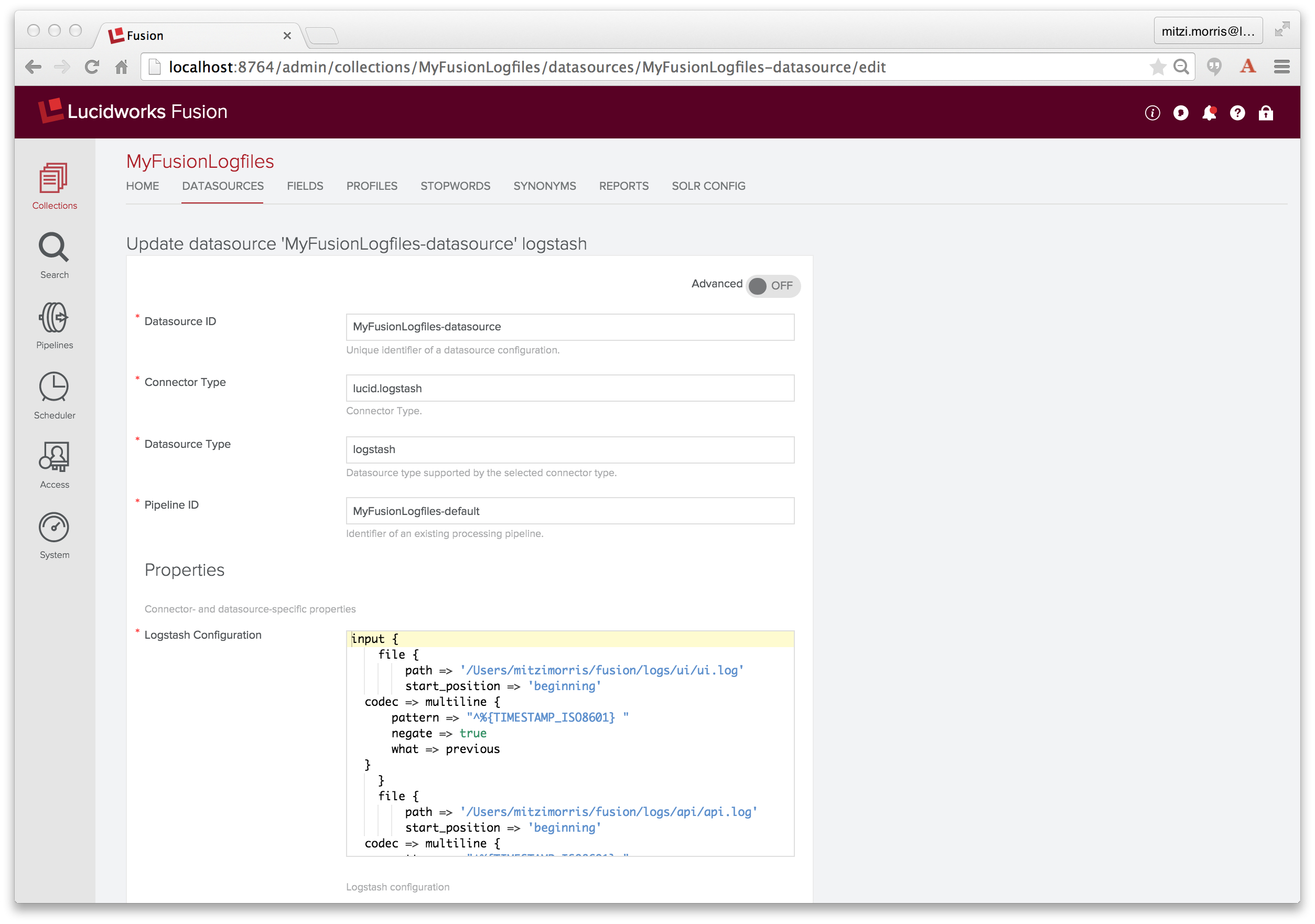

To apply this filter to my Fusion logfiles, the complete Logstash script is:

    input {
      file {
        path => '/Users/mitzimorris/fusion/logs/ui/ui.log'
        start_position => 'beginning'
        codec => multiline {
          pattern => "^%{TIMESTAMP_ISO8601} "
          negate => true
          what => previous
        }
      }
      file {
        path => '/Users/mitzimorris/fusion/logs/api/api.log'
        start_position => 'beginning'
        codec => multiline {
          pattern => "^%{TIMESTAMP_ISO8601} "
          negate => true
          what => previous
        }
      }
      file {
        path => '/Users/mitzimorris/fusion/logs/connectors/connectors.log'
        start_position => 'beginning'
        codec => multiline {
          pattern => "^%{TIMESTAMP_ISO8601} "
          negate => true
          what => previous
        }
      }
    }
    filter {
      grok {
        match => { 'message' => '%{TIMESTAMP_ISO8601:log4j_timestamp_tdt} *-* %{LOGLEVEL:log4j_level_s}\s+*[*\S+*]* *-* %{GREEDYDATA:log4j_msgs_t}' }
      }
    }
    output {
    }

This script specifies the set of logfiles to monitor. Since logfile messages may span multiple lines, for each logfile I use the Logstash multiline codec, following the Logstash docs example. This codec is the same for all files but must be applied to each input file in order to avoid interleaving lines from different logfiles. The actual work is done in the filter clause, by the grok filter discussed above.
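The multiline settings used here (a timestamp pattern, negate => true, what => previous) amount to the rule: any line that does not start with a timestamp belongs to the previous event. The Python sketch below mimics that grouping for a single file; it is an illustration of the rule, not Logstash code, and the timestamp regex is a simplified assumption.

```python
import re

STARTS_WITH_TIMESTAMP = re.compile(r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2},\d{3} ")

def group_events(lines):
    """Merge continuation lines (e.g. stack traces) into the preceding event."""
    events = []
    for line in lines:
        line = line.rstrip("\n")
        if STARTS_WITH_TIMESTAMP.match(line) or not events:
            events.append(line)            # a new event begins
        else:
            events[-1] += "\n" + line      # continuation of the previous event
    return events

lines = [
    "2015-05-21T11:30:59,979 - ERROR [t:C@1] - boom",
    "java.lang.RuntimeException: boom",
    "\tat Foo.bar(Foo.java:1)",
    "2015-05-21T11:31:00,198 - INFO [t:C@2] - ok",
]
for event in group_events(lines):
    print(repr(event))
```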

Indexing Logfiles with Fusion and Logstash

Using Fusion to index the Fusion logfiles requires the following:

A Fusion collection is a Solr collection plus a set of Fusion components. The Solr collection holds all of your data. The Fusion components include a pair of Fusion pipelines: one for indexing, one for search queries.

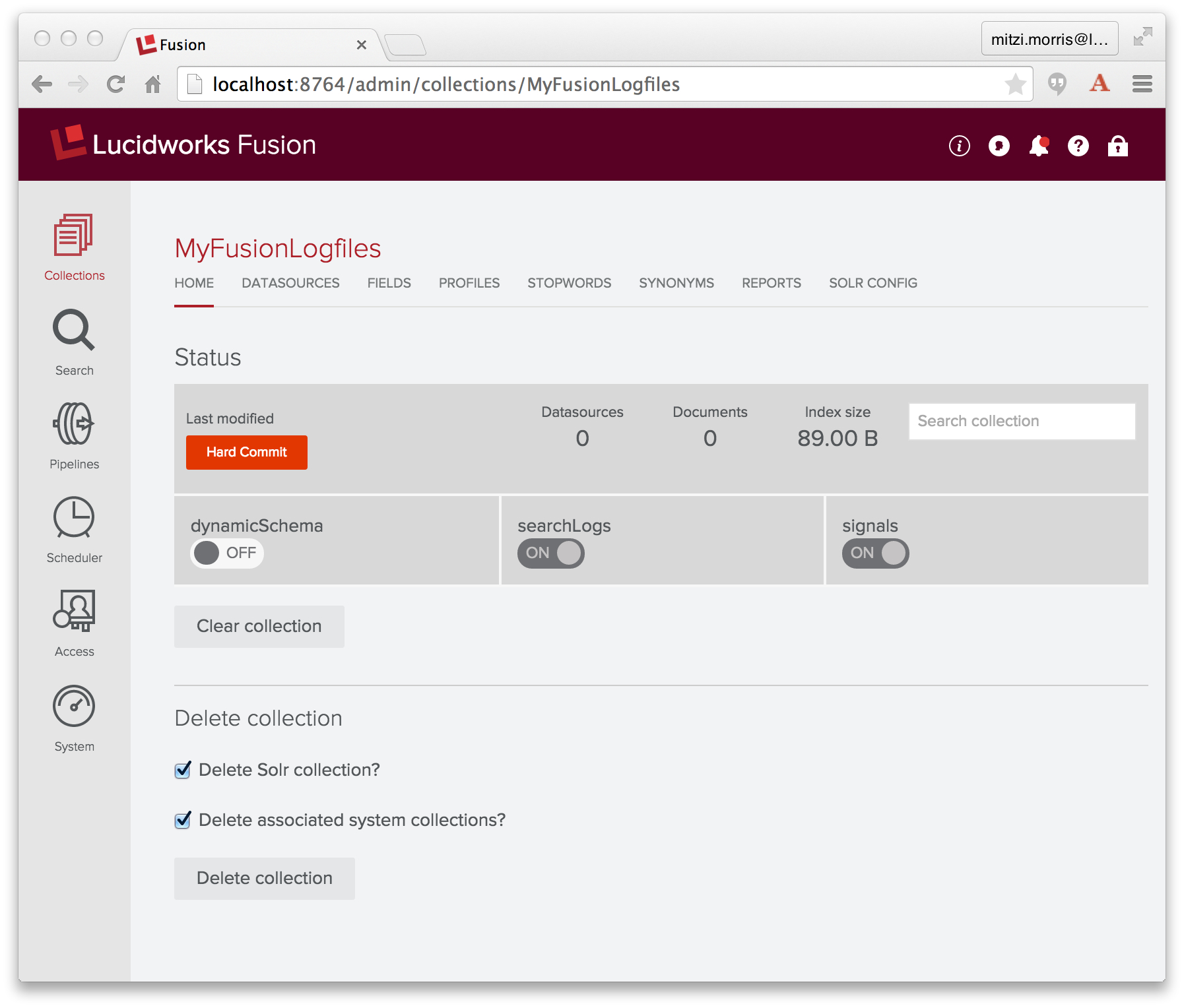

I create a collection named “MyFusionLogfiles” using the Fusion UI Admin Tool. Fusion creates an indexing pipeline called “MyFusionLogfiles-default”, as well as a query pipeline with the same name. Here is the initial collection:

Fusion calls Logstash using a Datasource configured to connect to Logstash.

Datasources store information about how to ingest data and they manage the ongoing flow of data into your application: the details of the data repository, how to access the repository, how to send raw data to a Fusion pipeline for Solr indexing, and the Fusion collection that contains the resulting Solr index. Fusion also records each time a datasource job is run and records the number of documents processed.

Datasources are managed by the Fusion UI Admin Tool or by direct calls to the REST-API. The Admin Tool provides a home page for each collection as well as datasources panel. For the collection named “MyFusionLogfiles” the URL of the collection home page is: http://<server>:<port>/admin/collections/MyFusionLogfiles and the URL of the datasources panel is http://<server>:<port>/admin/collections/MyFusionLogfiles/datasources.

To create a Logstash datasource, I choose “Logging” datasource of type “Logstash”. In the configuration panel I name the datasource “MyFusionLogfiles-datasource”, specify “MyFusionLogfiles-default” as the index pipeline to use, and copy the Logstash script into the Logstash configuration input box (which is a JavaScript enabled text input box).

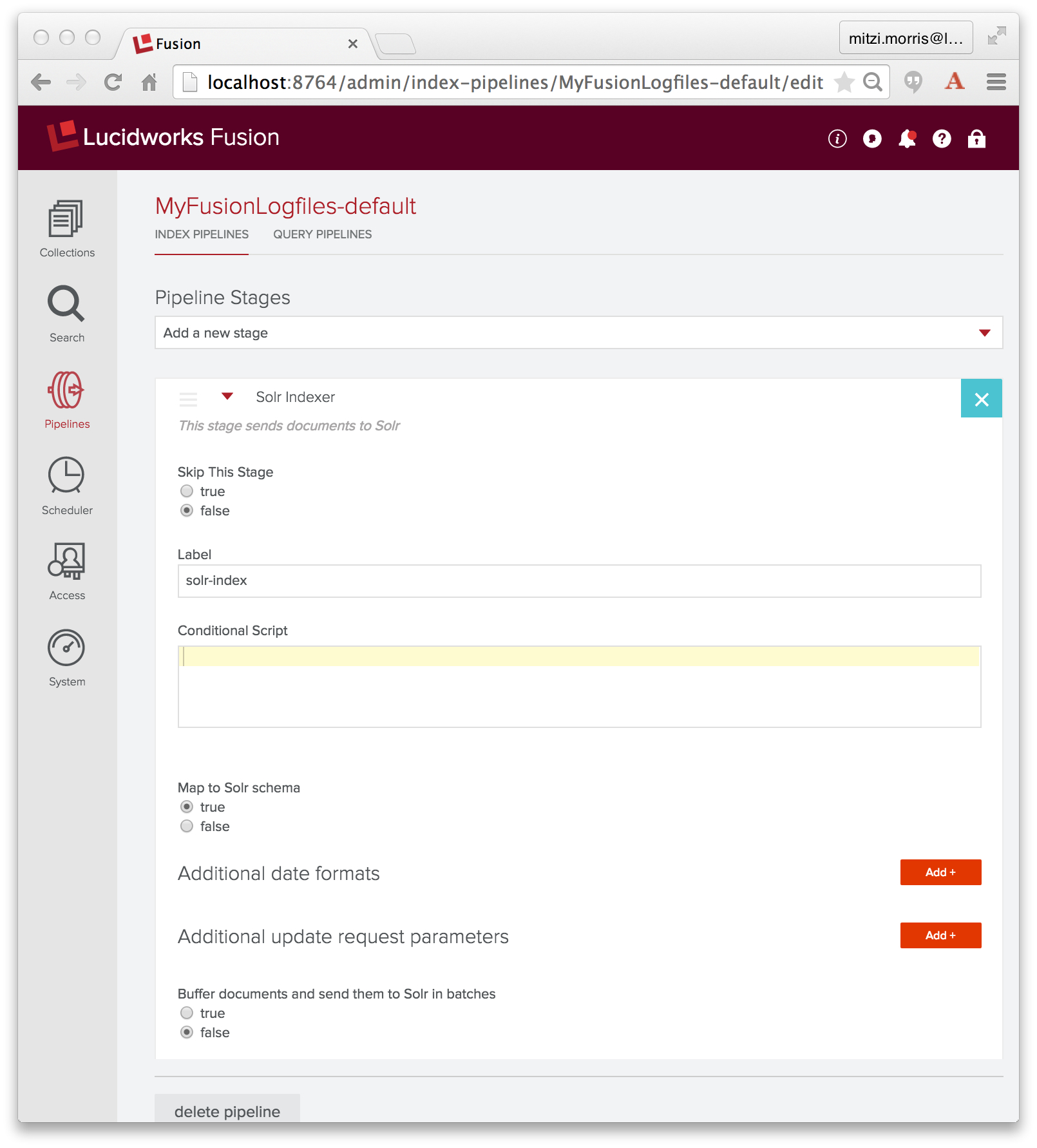

An Index pipeline transforms Logstash records into fielded documents for indexing by Solr. Fusion pipelines are composed of a sequence of one or more stages, where the inputs to one stage are the outputs from the previous stage. Fusion stages operate on PipelineDocument objects which organize the data submitted to the pipeline into a list of named field-value pairs, (discussed in a previous blog post). All PipelineDocument field values are strings. The inputs to the initial index pipeline stage are the output from the connector. The final stage is a Solr Indexer stage which sends its output to Solr for indexing into a Fusion collection.

In configuring the above datasource, I specified index pipeline “MyFusionLogfiles-default”, the index pipeline created in tandem with collection “MyFusionLogfiles”, which, as initially created, consists of a Solr Indexer stage:

The job of the Solr Indexer stage is to transform the PipelineDocument into a Solr document. Solr provides a rich set of datatypes, including datetime and numeric types. By default, a Solr Indexer stage is configured with property “enforceSchema” set to true, so that for each field in the PipelineDocument, the Solr Indexer stage checks the field name to see whether it is a valid field name for the collection’s Solr schema and whether or not the field contents can be converted into a valid instance of the Solr field’s defined datatype. If the field name is unknown or the PipelineDocument field is recognized as a Solr numeric or datetime field, but the field value cannot be converted to the proper type, then the Solr indexer stage transforms the field name so that the field contents are added to the collection as a text data field. This means that all of your data will be indexed into Solr, but the Solr document might not have the set of fields that you expect, instead your data will be in a field with an automatically generated field name, indexed as text.

Note that above, I carefully specified the grok filter so that the field names encode the field types: field “log4j_timestamp_tdt” is a Solr TrieDateField, field “log4j_level_s” is a Solr string field, and field “log4j_msgs_t” is a Solr text field.

Spoiler alert: this won’t work. Stay tuned for the fail and the fix.

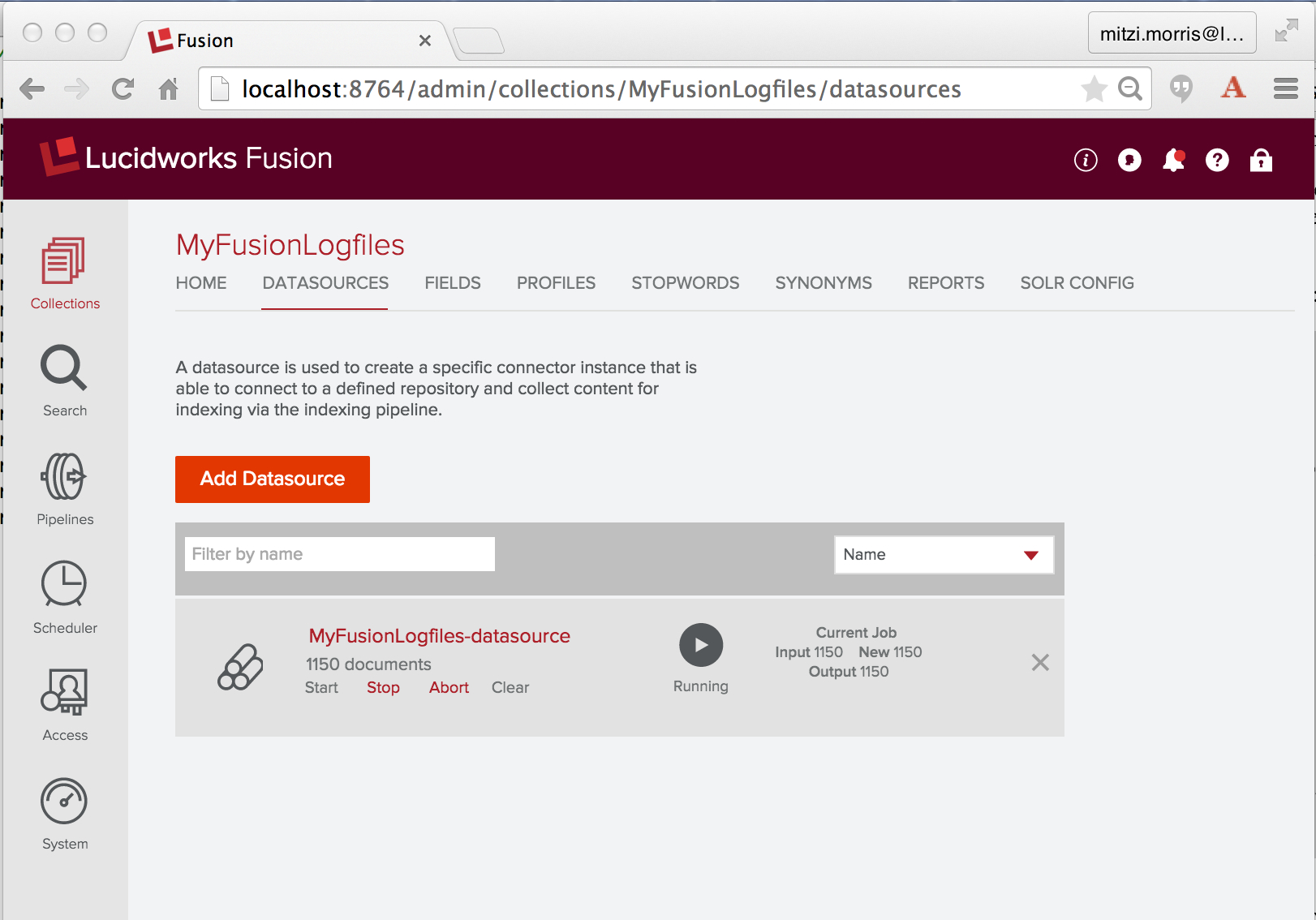

To ingest and index the Logstash data, I run the configured datasource using the controls displayed underneath the datasource name. Here is a screenshot of the “Datasources” panel of the Fusion UI Admin Tool while the Logstash datasource “MyFusionLogfiles-datasource” is running:

Once started, a Logstash connector will continue to run indefinitely. Since I’m using Fusion to index its own logfiles, including the connectors logfile, running this datasource will continue to generate new logfile entries to index. Before starting this job, I do a quick count on the number of logfile entries across the three logfiles I’m going to index:

    > grep "^2015" api/api.log connectors/connectors.log ui/ui.log | wc -l
    1537

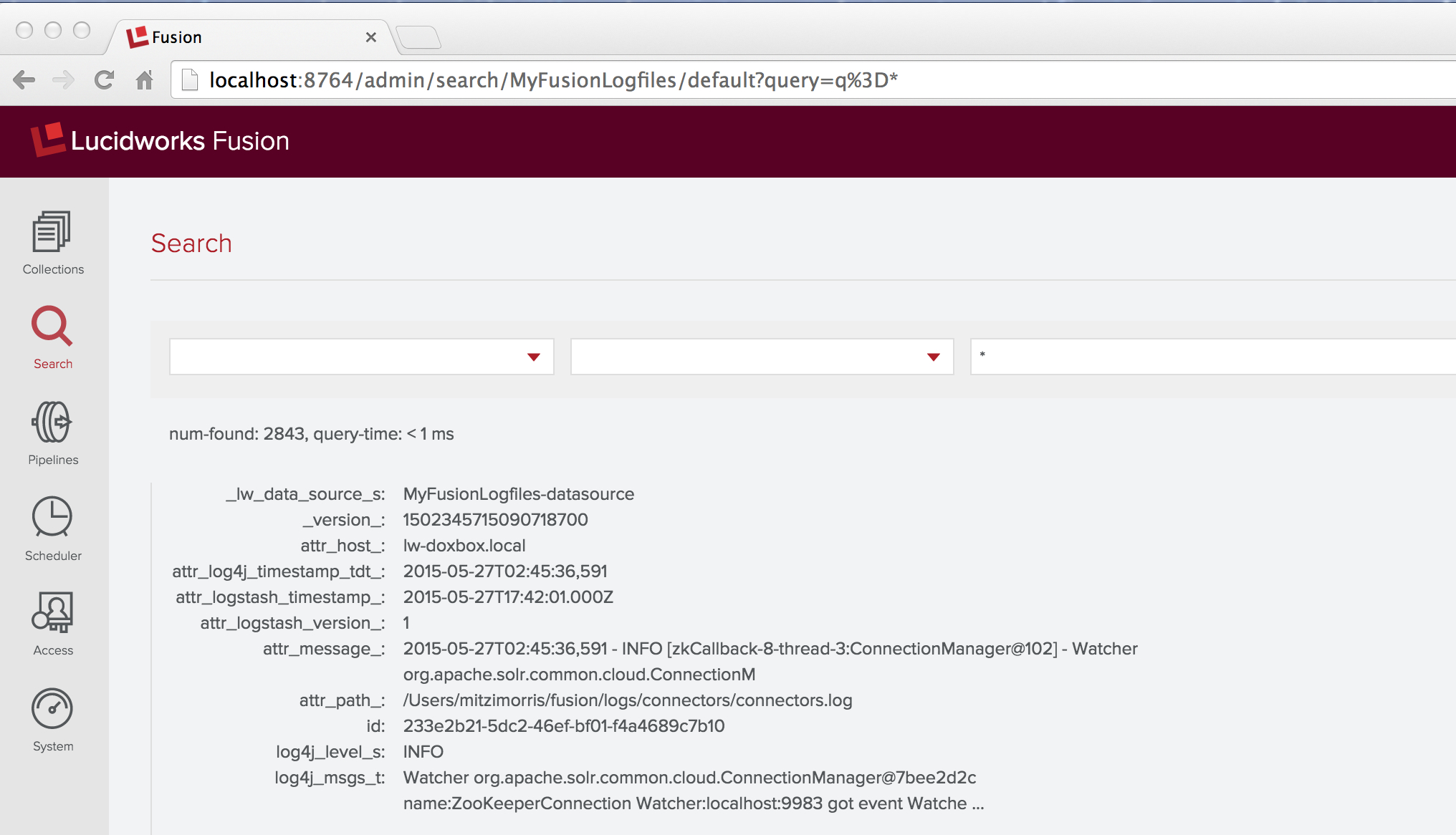

After a few minutes, I’ve indexed over 2000 documents, so I click on the “stop” control. Then I go back to the “home” panel and check my work by running a wildcard search (“*”) over the collection “MyFusionLogfiles”. The first result looks like this:

This result contains the fail that I promised. The raw logfile message was:

2015-05-27T02:45:36,591 - INFO [zkCallback-8-thread-3:ConnectionManager@102] - Watcher org.apache.solr.common.cloud.ConnectionManager@7bee2d2c name:ZooKeeperConnection Watcher:localhost:9983 got event WatchedEvent state:Disconnected type:None path:null path:null type:None

The document contains fields named “log4j_level_s” and “log4j_msgs_t”, but there’s no field named “log4j_timestamp_tdt” – instead there’s a field “attr_log4j_timestamp_tdt_” with value “2015-05-27T02:45:36,591”. This is the work of the Solr indexer stage, which renamed this field by adding the prefix “attr_” as well as a suffix “_”. The Solr schemas for Fusion collections have a dynamic field definition:

<dynamicField name="attr_*" type="text_general" indexed="true" stored="true" multiValued="true"/>

This explains the specifics of the resulting field name and field type, but it doesn’t explain why this remapping was necessary.
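To make the pattern matching concrete, here is a minimal Python sketch of how a field name could be resolved against dynamic field patterns. Only the `attr_*` rule comes from the schema line above; the other three rules, the type name `tdate`, and the "longest matching pattern wins" tie-break are assumptions made for illustration, and `resolve_field_type` is a hypothetical helper, not a Solr API.

```python
from fnmatch import fnmatchcase

# Only the "attr_*" rule is taken from the post; the rest are assumed
# for illustration and may differ from the actual Fusion schema.
DYNAMIC_FIELDS = [
    ("attr_*", "text_general"),
    ("*_tdt", "tdate"),
    ("*_s", "string"),
    ("*_t", "text_general"),
]

def resolve_field_type(field_name, rules=DYNAMIC_FIELDS):
    """Return the type of the longest pattern matching field_name, or None."""
    matches = [(pattern, ftype) for pattern, ftype in rules
               if fnmatchcase(field_name, pattern)]
    if not matches:
        return None
    # Assumption: when several patterns match, the longest one wins.
    return max(matches, key=lambda rule: len(rule[0]))[1]

print(resolve_field_type("log4j_timestamp_tdt"))        # date-typed name
print(resolve_field_type("attr_log4j_timestamp_tdt_"))  # renamed field
```

Under these assumed rules, the renamed field no longer ends in “_tdt”, so only the `attr_*` pattern applies and the value is stored as general text.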

Why isn’t the Log4j timestamp “2015-05-27T02:45:36,591” a valid timestamp for Solr? The answer is that the Solr date format is:

yyyy-MM-dd'T'HH:mm:ss.SSS'Z'

‘Z’ is the special Coordinated Universal Time (UTC) designator. Because Lucene/Solr range queries over timestamps require timestamps to be strictly comparable, all date information must be expressed in UTC. The Log4j timestamp presents two problems:

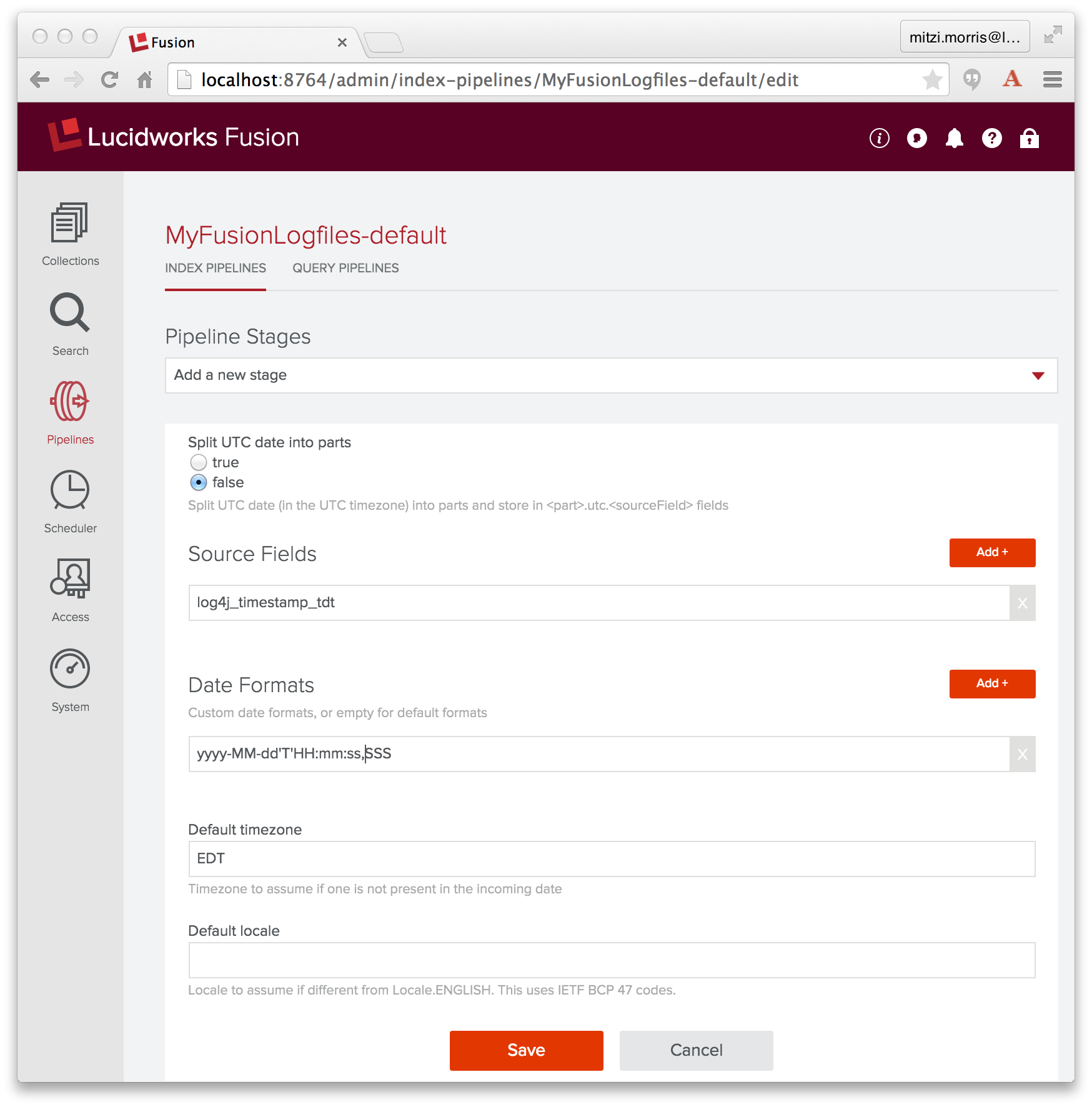

Because date/time data is a key datatype, Fusion provides a Date Parsing index stage, which parses and normalizes date/time data in document fields. Therefore, to fix this problem, I add a Date Parsing stage to index pipeline “MyFusionLogfiles-default”, where I specify the source field as “log4j_timestamp_tdt”, the date format as “yyyy-MM-dd'T'HH:mm:ss,SSS”, and the timezone as “EDT”:

This stage must come before the Solr Indexer stage. In the Fusion UI, adding a new stage to a pipeline puts it at the end of the pipeline, so I need to reorder the pipeline by dragging the Date Parsing stage so that it precedes the Solr Indexer stage.
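Judging from the stage configuration, the normalization has to do two things: read a timestamp that uses a comma before the milliseconds, and convert a local time with no timezone marker into UTC. The Python sketch below illustrates that conversion; it is not Fusion's implementation. The function name `log4j_to_solr_date` is hypothetical, and EDT is modeled as a fixed UTC-4 offset, which is a simplifying assumption.

```python
from datetime import datetime, timedelta, timezone

# Assumption: treat EDT as a fixed offset of UTC-4 (no DST handling).
EDT = timezone(timedelta(hours=-4), "EDT")

def log4j_to_solr_date(stamp, tz=EDT):
    """Convert 'yyyy-MM-ddTHH:mm:ss,SSS' local time to Solr's UTC format."""
    # %f accepts the three millisecond digits after the comma.
    local = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%S,%f").replace(tzinfo=tz)
    utc = local.astimezone(timezone.utc)
    millis = utc.microsecond // 1000
    return utc.strftime("%Y-%m-%dT%H:%M:%S") + f".{millis:03d}Z"

print(log4j_to_solr_date("2015-05-27T02:45:36,591"))
# prints 2015-05-27T06:45:36.591Z
```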

Once this change is in place, I clear both the collection and the datasource and re-run the indexing job. An annoying detail for the Logstash connector is that in order to clear the datasource, I need to track down the Logstash “since_db” files on disk, which track the last line read in each of the Logstash input files (known issue, CONN-881). On my machine, I find a trio of hidden files in my home directory, with names that start with “.sincedb_” followed by ids like “1e63ae1742505a80b50f4a122e1e0810”, and delete them.

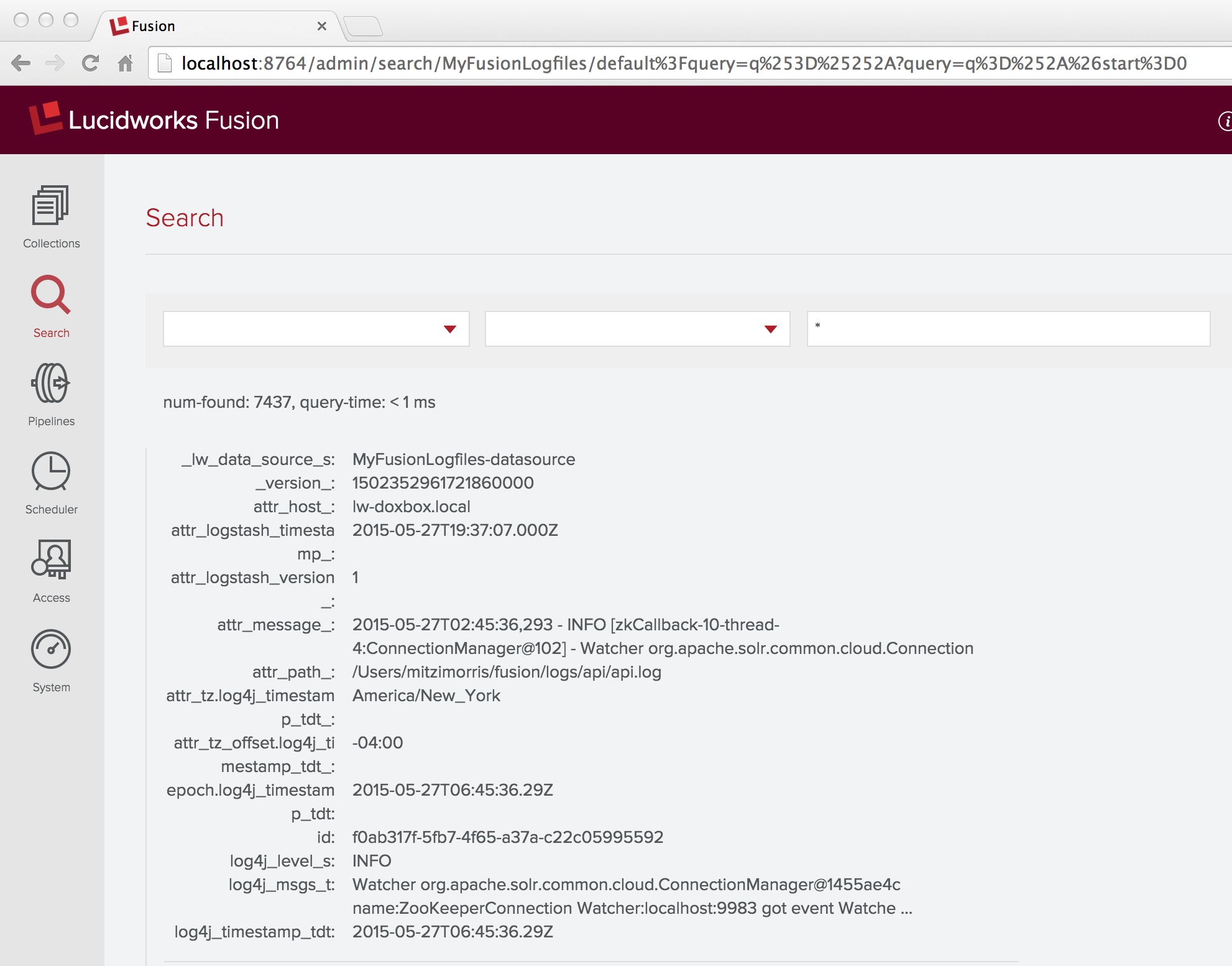

Problem solved! A wildcard search over all documents in collection “MyFusionLogfiles” shows that the set of document fields includes the “log4j_timestamp_tdt” field, along with several fields added by the Date Parsing index stage:

More than 90% of data analytics is data munging, because the data never fits together quite as cleanly as it ought to.

Once you accept this fact, you will appreciate the power and flexibility of Fusion Pipeline index stages and the power and convenience of the tools on the Fusion UI.

Fusion Log Analytics with Fusion

Fusion Dashboards provide interactive visualizations over your data. This is a reprise of the information in my previous post on Log Analytics with Fusion, which used a small CSV file of cleansed server-log data. Now that I’ve got three logfiles’ worth of data, I’ll create a similar dashboard.

The Fusion Dashboards tool is the rightmost icon on the Fusion UI launchpad page. It can be accessed directly as: http://localhost:8764/banana/index.html#/dashboard. When opened from the Fusion launchpad, the Dashboards tool displays in a new tab labeled “Banana 3”. Time-series dashboards show trends over time by using the timestamp field to aggregate query results. To create a time-series dashboard over the collection “MyFusionLogfiles”, I click on the new page icon in the upper right-hand corner of the top menu, and choose to create a new time-series dashboard, specifying “MyFusionLogfiles” as the collection, and “log4j_timestamp_tdt” as the field. I modify the default time-series dashboard, again, by adding a pie chart that shows the breakdown of logging events by priority:

Et voilà! It all just works!

The post Data Analytics using Fusion and Logstash appeared first on Lucidworks.

The post Lucidworks Fusion Now Available in AWS Marketplace appeared first on Lucidworks.

Spock: The logical thing for you to have done was to have left me behind.

McCoy: Mr. Spock, remind me to tell you that I’m sick and tired of your logic.

This is the third in a series of blog posts on a technique that I call Query Autofiltering – using the knowledge built into the search index itself to do a better job of parsing queries and therefore giving better answers to user questions.

The first installment set the stage by arguing that a better understanding of language and how it is used when users formulate queries, can help us to craft better search applications – especially how adjectives and adverbs – which can be thought of as attributes or properties of subject or action words (nouns and verbs) – should be made to refine rather than to expand search results – and why the OOTB search engine doesn’t do this correctly. Solving this at the language level is a hard problem. A more tractable solution involves leveraging the descriptive information that we may have already put into our search indexes for the purposes of navigation and display, to parse or analyze the incoming query. Doing this enables us to produce results that more closely match the user’s intent.

The second post describes an implementation approach using the Lucene FieldCache that can automatically detect when terms or phrases in a query are contained in metadata fields and to then use that information to construct more precise queries. So rather than searching and then navigating, the user just searches and finds (even if they are not feeling lucky).

An interesting problem developed from this work – what to do when more than one term in a query matches the same metadata field? It turns out that the answer is one of the favorite phrases of software consultants – “It Depends”. It depends on whether the field is single or multi valued. Understanding why this is so leads to a very interesting insight – logic in language is not ambiguous, it is contextual, and part of the context is knowing what type of field we are talking about. Solving this enables us to respond correctly to boolean terms (“and” and “or”) in user queries, rather than simply ignoring them (by treating them as stop words) as we typically do now.

    SolrIndexSearcher searcher = rb.req.getSearcher();
    IndexSchema schema = searcher.getSchema();
    SchemaField field = schema.getField( fieldName );

    boolean useAnd = field.multiValued() && useAndForMultiValuedFields;

    // if query has 'or' in it and or is at a position
    // 'within' the values for this field ...
    if (useAnd) {
        for (int i = termPosRange[0] + 1; i < termPosRange[1]; i++ ) {
            String qToken = queryTokens.get( i );
            if (qToken.equalsIgnoreCase( "or" )) {
                useAnd = false;
                break;
            }
        }
    }

    StringBuilder qbldr = new StringBuilder( );
    for (String val : valList ) {
        if (qbldr.length() > 0) qbldr.append( (useAnd ? " AND " : " OR ") );
        qbldr.append( val );
    }

    return fieldName + ":(" + qbldr.toString() + ")" + suffix;
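The excerpt above depends on state held by the surrounding component, so it cannot be run on its own. As a rough illustration, the same decision logic can be restated as a standalone Python function. The function name and parameter names below are hypothetical, and the sketch assumes the field's values have already been matched against the query.

```python
def build_field_clause(field_name, values, multi_valued, query_tokens,
                       term_pos_range, use_and_for_multi_valued=True,
                       suffix=""):
    """Join matched values with AND for multi-valued fields, else OR."""
    use_and = multi_valued and use_and_for_multi_valued
    if use_and:
        start, end = term_pos_range
        # An explicit "or" between the matched terms overrides the AND default.
        for i in range(start + 1, end):
            if query_tokens[i].lower() == "or":
                use_and = False
                break
    joiner = " AND " if use_and else " OR "
    return f"{field_name}:({joiner.join(values)}){suffix}"

tokens = ["white", "and", "grey", "dress", "shirts"]
print(build_field_clause("colors", ["white", "grey"], True, tokens, (0, 2)))
print(build_field_clause("color", ["white", "grey"], False, tokens, (0, 2)))
```

A single-valued field can never hold both values at once, so OR is the only operator that can return results for it; a multi-valued field defaults to AND unless the user wrote “or”.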

The full source code for the QueryAutofilteringComponent is available on github for both Solr 4.x and Solr 5.x. (Due to API changes introduced in Solr 5.0, two versions of this code are needed.)

<doc>

<field name="id">17</field>

<field name="product_type">boxer shorts</field>

<field name="product_category">underwear</field>

<field name="color">white</field>

<field name="brand">Fruit of the Loom</field>

<field name="consumer_type">mens</field>

</doc>

. . .

<doc>

<field name="id">95</field>

<field name="product_type">sweatshirt</field>

<field name="product_category">shirt</field>

<field name="style">V neck</field>

<field name="style">short-sleeve</field>

<field name="brand">J Crew Factory</field>

<field name="color">grey</field>

<field name="material">cotton</field>

<field name="consumer_type">womens</field>

</doc>

. . .

<doc>

<field name="id">154</field>

<field name="product_type">crew socks</field>

<field name="product_category">socks</field>

<field name="color">white</field>

<field name="brand">Joe Boxer</field>

<field name="consumer_type">mens</field>

</doc>

. . .

<doc>

<field name="id">135</field>

<field name="product_type">designer jeans</field>

<field name="product_category">pants</field>

<field name="brand">Calvin Klein</field>

<field name="color">blue</field>

<field name="style">pre-washed</field>

<field name="style">boot-cut</field>

<field name="consumer_type">womens</field>

</doc>

The dataset contains built-in ambiguities in which a single token can occur as part of a product type, brand name, color or style. Color names are good examples of this but there are others (boxer shorts the product vs Joe Boxer the brand). The ‘style’ field is multi-valued. Here are the schema.xml definitions of the fields:

    <field name="brand" type="string" indexed="true" stored="true" multiValued="false" />
    <field name="color" type="string" indexed="true" stored="true" multiValued="false" />
    <field name="colors" type="string" indexed="true" stored="true" multiValued="true" />
    <field name="material" type="string" indexed="true" stored="true" multiValued="false" />
    <field name="product_type" type="string" indexed="true" stored="true" multiValued="false" />
    <field name="product_category" type="string" indexed="true" stored="true" multiValued="false" />
    <field name="consumer_type" type="string" indexed="true" stored="true" multiValued="false" />
    <field name="style" type="string" indexed="true" stored="true" multiValued="true" />
    <field name="made_in" type="string" indexed="true" stored="true" multiValued="false" />

To make these string fields searchable from a “freetext” – box-and-a-button query (e.g. q=red socks), the data is copied to the catch-all text field ‘text’:

    <copyField source="color" dest="text" />
    <copyField source="colors" dest="text" />
    <copyField source="brand" dest="text" />
    <copyField source="material" dest="text" />
    <copyField source="product_type" dest="text" />
    <copyField source="product_category" dest="text" />
    <copyField source="consumer_type" dest="text" />
    <copyField source="style" dest="text" />
    <copyField source="made_in" dest="text" />

The solrconfig file has these additions for the QueryAutoFilteringComponent and a request handler that uses it:

<requestHandler name="/autofilter" class="solr.SearchHandler">

<lst name="defaults">

<str name="echoParams">explicit</str>

<int name="rows">10</int>

<str name="df">description</str>

</lst>

<arr name="first-components">

<str>queryAutofiltering</str>

</arr>

</requestHandler>

<searchComponent name="queryAutofiltering" class="org.apache.solr.handler.component.QueryAutoFilteringComponent" />

Example 1: “White Linen perfume”

There are many examples of this query problem in the data set where a term such as “white” is ambiguous because it can occur in a brand name and as a color, but this one has two ambiguous terms “white” and “linen” so it is a good example of how the autofiltering parser works. The phrase “White Linen” is known from the dataset to be a brand and “perfume” maps to a product type, so the basic autofiltering algorithm would match “White” as a color, then reject that for “White Linen” as a brand – since it is a longer match. It will then correctly find the item “White Linen perfume”. However, what if I search for “white linen shirts”? In this case, the simple algorithm won’t match because it will fail to provide the alternative parsing “color:white AND material:linen”. That is, now the phrase “White Linen” is ambiguous. In this case, an additional bit of logic is applied to see if there is more than one possible parsing of this phrase, so in this case, the parser produces the following query:

((brand:"White Linen" OR (color:white AND material:linen)) AND product_category:shirts)

Since there are no instances of shirts made by White Linen (and if there were, the result would still be correct), we just get shirts back. Similarly for the perfume, since perfume made from linen doesn’t exist, we only get the one product. That is, some of the filtering here is done in the collection. The parser doesn’t know what makes “sense” at the global level and what doesn’t, but the dataset does – so between the two, we get the right answer.

Example 2: “white and grey dress shirts”

In this case, I have created two color fields, “color” which is used for solid-color items and is single valued and “colors” which is used for multicolored items (like striped or patterned shirts) and is multi valued. So if I have dress shirts in the data set that are solid-color white and solid-color grey and also striped shirts that are grey and white stripes and I search for “white and grey dress shirts”, my intent is interpreted by the autofiltering parser as “show me solid-color shirts in both white and grey or multi-colored shirts that have both white and grey in them”. This is the boolean query that it generates:

((product_type:"dress shirt" OR ((product_type:dress OR product_category:dress) AND (product_type:shirt OR product_category:shirt))) AND (color:(white OR grey) OR colors:(white AND grey)))

(Note that it also creates a redundant query for dress and shirt since “dress” is also a product type – but this query segment returns no results since no item is both a “dress” and a “shirt” – so it is just a slight performance waster). If I don’t want the solid colors, I can search for “striped white and grey dress shirts” and get just those items (or use the facets). (We could also have a style like “multicolor” vs “solid color” to disambiguate but that may not be too intuitive.) In this case, the query that the autofilter generates looks like this:

((product_type:"dress shirt" OR ((product_type:dress OR product_category:dress) AND (product_type:shirt OR product_category:shirt))) AND (color:(white OR grey) OR colors:(white AND grey)) AND style:striped)

Suffice it to say that the out-of-the-box /select request handler doesn’t do any of this. To be fair, it does a good job of relevance ranking for these examples, but its precision (percentage of true or correct positives) is very poor. You can see this by comparing the number of results that you get with the /select handler vs. the /autofilter handler – in terms of precision, it’s night and day.

But is this dataset “too contrived” to be of real significance? For eCommerce data, I don’t think so: many of these examples are real-world products, and marketing data is rife with ambiguities that standard relevance algorithms operating at the single token level simply can’t address. The autofilter deals with ambiguity by noting that phrases tend to be less ambiguous than terms, but goes further by providing alternate parsing when the phrase is ambiguous. We want to remove ambiguities that stem from the tokenization that we do on the documents and queries – we cannot remove real ambiguities, rather we need to respond to them appropriately.

The post Query Autofiltering Extended – On Language and Logic in Search appeared first on Lucidworks.

json.facet={

unique_products : "unique(product_type)"

}

top_authors : {

terms : {

field:author,

limit:5

}

}

is equivalent to this request in 5.2:

top_authors : {

type:terms,

field:author,

limit:5

}
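To show that the two request shapes carry the same information, here is a small Python sketch that rewrites the nested form into the flat `type:` form. The helper `to_flat_facet` is hypothetical and is not part of Solr; it assumes the nested form has exactly one key, the facet type.

```python
def to_flat_facet(facet):
    """Rewrite {facet_type: {params}} as {"type": facet_type, **params}."""
    if "type" in facet:
        return dict(facet)  # already in the flat form
    # Assumption: the nested form has a single key naming the facet type.
    (facet_type, params), = facet.items()
    return {"type": facet_type, **params}

nested = {"terms": {"field": "author", "limit": 5}}
print(to_flat_facet(nested))
# prints {'type': 'terms', 'field': 'author', 'limit': 5}
```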

prices:{

type:range,

field:price,

mincount:1,

start:0,

end:100,

gap:10

}

q=cars

&fq={!tag=COLOR}color:black

&fq={!tag=MODEL}model:xc90

&json.facet={

colors:{type:terms, field:color, excludeTags:COLOR},

model:{type:terms, field:model, excludeTags:MODEL}

}

The above example shows a request where a user selected “color:black”. This query would do the following:

json.facet={

unique_products : "hll(product_type)"

}

The post What’s new in Apache Solr 5.2 appeared first on Lucidworks.

The post Indexing Performance in Solr 5.2 (now twice as fast) appeared first on Lucidworks.

“Thankfully, open source is chock full of high-quality libraries to solve common problems in text processing like sentiment analysis, topic identification, automatic labeling of content, and more. More importantly, open source also provides many building block libraries that make it easy for you to innovate without having to reinvent the wheel. If all of this stuff is giving you flashbacks to your high school grammar classes, not to worry—we’ve included some useful resources at the end to brush up your knowledge as well as explain some of the key concepts around natural language processing (NLP). To begin your journey, check out these projects.“

Read all of Grant’s columns on Opensource.com or follow him on Twitter.

The post Top 5 Open Source Natural Language Processing Libraries appeared first on Lucidworks.

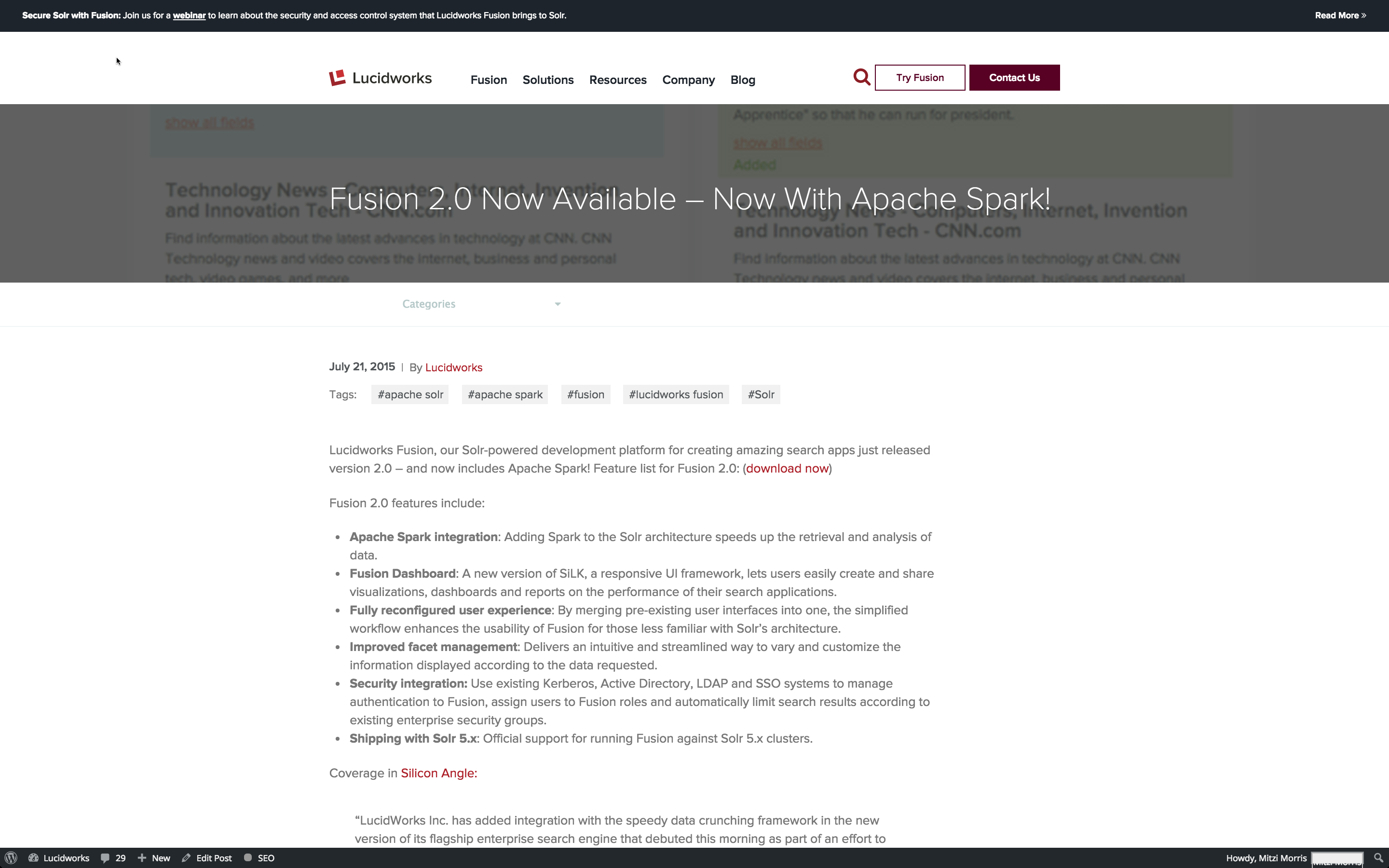

“LucidWorks Inc. has added integration with the speedy data crunching framework in the new version of its flagship enterprise search engine that debuted this morning as part of an effort to catch up with the changing requirements of CIOs embarking on analytics projects. ... That’s thanks mainly to its combination of speed and versatility, which Fusion 2.0 harnesses to try and provide more accurate results for queries run against unstructured data. More specifically, the software – a commercial implementation of Solr, one of the most popular open-source search engines for Hadoop, in turn the de facto storage backend for Spark – uses the project’s native machine learning component to help hone the relevance of results.”

Coverage in Software Development Times:

Fusion 2.0’s Spark integration within its data-processing layer enables real-time analytics within the enterprise search platform, adding Spark to the Solr architecture to accelerate data retrieval and analysis. Developers using Fusion now also have access to Spark’s store of machine-learning libraries for data-driven analytics. “Spark allows users to leverage real-time streams of data, which can be accessed to drive the weighting of results in search applications,” said Lucidworks CEO Will Hayes. “In regards to enterprise search innovation, the ability to use an entirely different system architecture, Apache Spark, cannot be overstated. This is an entirely new approach for us, and one that our customers have been requesting for quite some time.”

Press release on MarketWired.

The post Fusion 2.0 Now Available – Now With Apache Spark! appeared first on Lucidworks.

Lucidworks Fusion is a platform for data engineering, built on Solr/Lucene, the Apache open source search engine, which is fast, scalable, proven, and reliable. Fusion uses the Solr/Lucene engine to evaluate search requests and return results in the form of a ranked list of document ids. It gives you the ability to slice and dice your data and search results, which means that you can have Google-like search over your data, while maintaining control of both your data and the search results.

The difference between data science and data engineering is the difference between theory and practice. Data engineers build applications given a goal and constraints. For natural language search applications, the goal is to return relevant search results given an unstructured query. The constraints include: limited, noisy, and/or downright bad data and search queries, limited computing resources, and penalties for returning irrelevant or partial results.

As a data engineer, you need to understand your data and how Fusion uses it in search applications. The hard part is understanding your data. In this post, I cover the key building blocks of Fusion search.

Fusion extends Solr/Lucene functionality via a REST-API and a UI built on top of that REST-API. The Fusion UI is organized around the following key concepts:

Lucene started out as a search engine designed for the following information retrieval task: given a set of query terms and a set of documents, find the subset of documents which are relevant for that query. Lucene provides a rich query language which allows for writing complicated logical conditions. Lucene now encompasses much of the functionality of a traditional DBMS, both in the kinds of data it can handle and the transactional security it provides.

Lucene maps discrete pieces of information, e.g., words, dates, numbers, to the documents in which they occur. This map is called an inverted index because the keys are document elements and the values are document ids, in contrast to other kinds of datastores where document ids are used as a key and the values are the document contents. This indexing strategy means that search requires just one lookup on an inverted index, as opposed to a document oriented search which would require a large number of lookups, one per document. Lucene treats a document as a list of named, typed fields. For each document field, Lucene builds an inverted index that maps field values to documents.
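A toy version of a per-field inverted index can make the idea concrete. The Python sketch below is an illustration only: real Lucene indexes run text through analyzers and store far richer postings, and the function names and sample documents here are made up for the example.

```python
from collections import defaultdict

def build_inverted_index(docs):
    """Map field -> term -> set of ids of the documents containing it."""
    index = defaultdict(lambda: defaultdict(set))
    for doc_id, fields in docs.items():
        for field, text in fields.items():
            # Naive analysis: lowercase and split on whitespace.
            for term in text.lower().split():
                index[field][term].add(doc_id)
    return index

def search(index, field, term):
    """One lookup on the index returns every matching document id."""
    return sorted(index[field].get(term.lower(), set()))

docs = {
    1: {"title": "Fusion and Spark", "body": "Search with Solr"},
    2: {"title": "Solr basics", "body": "Fusion wraps Solr"},
}
index = build_inverted_index(docs)
print(search(index, "title", "fusion"))  # prints [1]
print(search(index, "body", "solr"))     # prints [1, 2]
```

Note that the lookup cost does not depend on scanning each document, which is the point of keying the map on terms rather than on document ids.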

Lucene itself is a search API. Solr wraps Lucene in a web platform. Search and indexing are carried out via HTTP requests and responses. Solr generalizes the notion of a Lucene index to a Solr collection, a uniquely named, managed, and configured index which can be distributed (“sharded”) and replicated across servers, allowing for scalability and high availability.
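As an illustration of search over HTTP, the Python sketch below builds the URL for a query against a collection's select handler without sending it. The host, port, and collection name are placeholders assumed for the example, and `solr_select_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

def solr_select_url(collection, query, rows=10,
                    base="http://localhost:8983/solr"):
    """Build a select-handler URL; base and collection are assumptions."""
    params = urlencode({"q": query, "rows": rows, "wt": "json"})
    return f"{base}/{collection}/select?{params}"

# A match-all query against a hypothetical collection.
print(solr_select_url("my_collection", "*:*"))
```

Fetching that URL from a running Solr instance would return the matching documents as a JSON response.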

The following sections show how the above set of key concepts are realized in the Fusion UI.

Fusion collections are Solr collections which are managed by Fusion. Fusion can manage as many collections as you need, want, or both. On initial login, the Fusion UI prompts you to choose or create a collection. On subsequent logins, the Fusion UI displays an overview of your collections and system collections:

The above screenshot shows the Fusion collections page for an initial developer installation, just after initial login and creation of a new collection called “my_collection”, which is circled in yellow. Clicking on this circled name leads to the “my_collection” collection home page:

The collection home page contains controls for both search and indexing. As this collection doesn’t yet contain any documents, the search results panel is empty.

Bootstrapping a search app requires an initial indexing run over your data, followed by successive cycles of search and indexing until you have a search app that does what you want it to do and what search users expect it to do. The collections home page indexing toolset contains controls for defining and using datasources and pipelines.

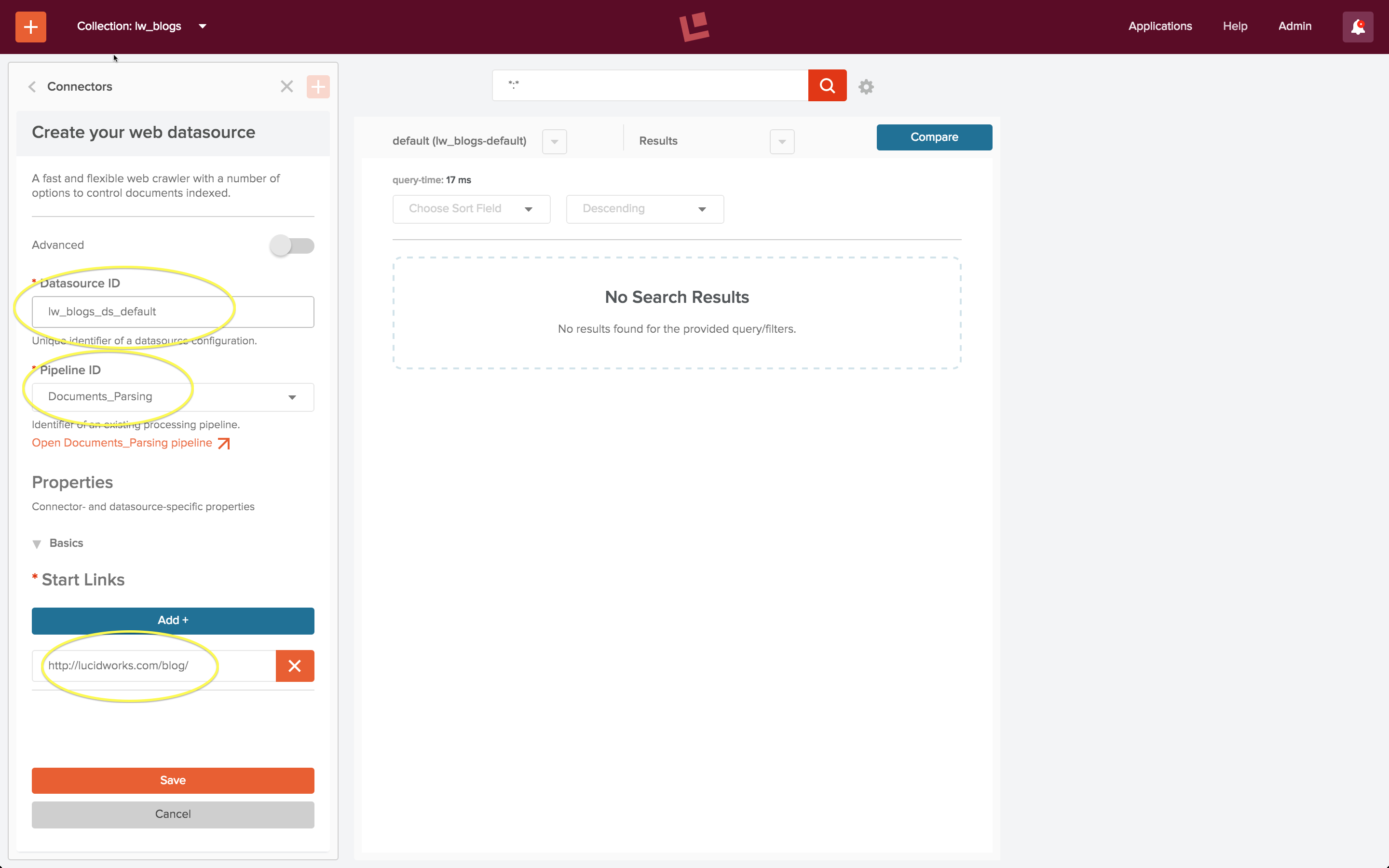

Once you have created a collection, clicking on the “Datasource” control changes the left hand side control panel over to the datasource configuration panel. The first step in configuring a datasource is specifying the kind of data repository to connect to. Fusion connectors are a set of programs which do the work of connecting to and retrieving data from specific repository types. For example, to index a set of web pages, a datasource uses a web connector.

To configure the datasource, choose the “edit” control. The datasource configuration panel controls the choice of indexing pipeline. All datasources are pre-configured with a default indexing pipeline. The “Documents_Parsing” indexing pipeline is the default pipeline for use with a web connector. Beneath the pipeline configuration control is a control “Open <pipeline name> pipeline”. Clicking on this opens a pipeline editing panel next to the datasource configuration panel:

Once the datasource is configured, the indexing job is run by controls on the datasource panel:

The “Start” button, circled in yellow, when clicked, changes to “Stop” and “Abort” controls. Beneath this button is a “Show details”/”Hide details” control, shown in its open state.

Creating and maintaining a complete, up-to-date index over your data is necessary for good search. Much of this process consists of data munging. Connectors and pipelines make this chore manageable, repeatable, and testable. It can be automated using Fusion’s job scheduling and alerting mechanisms.

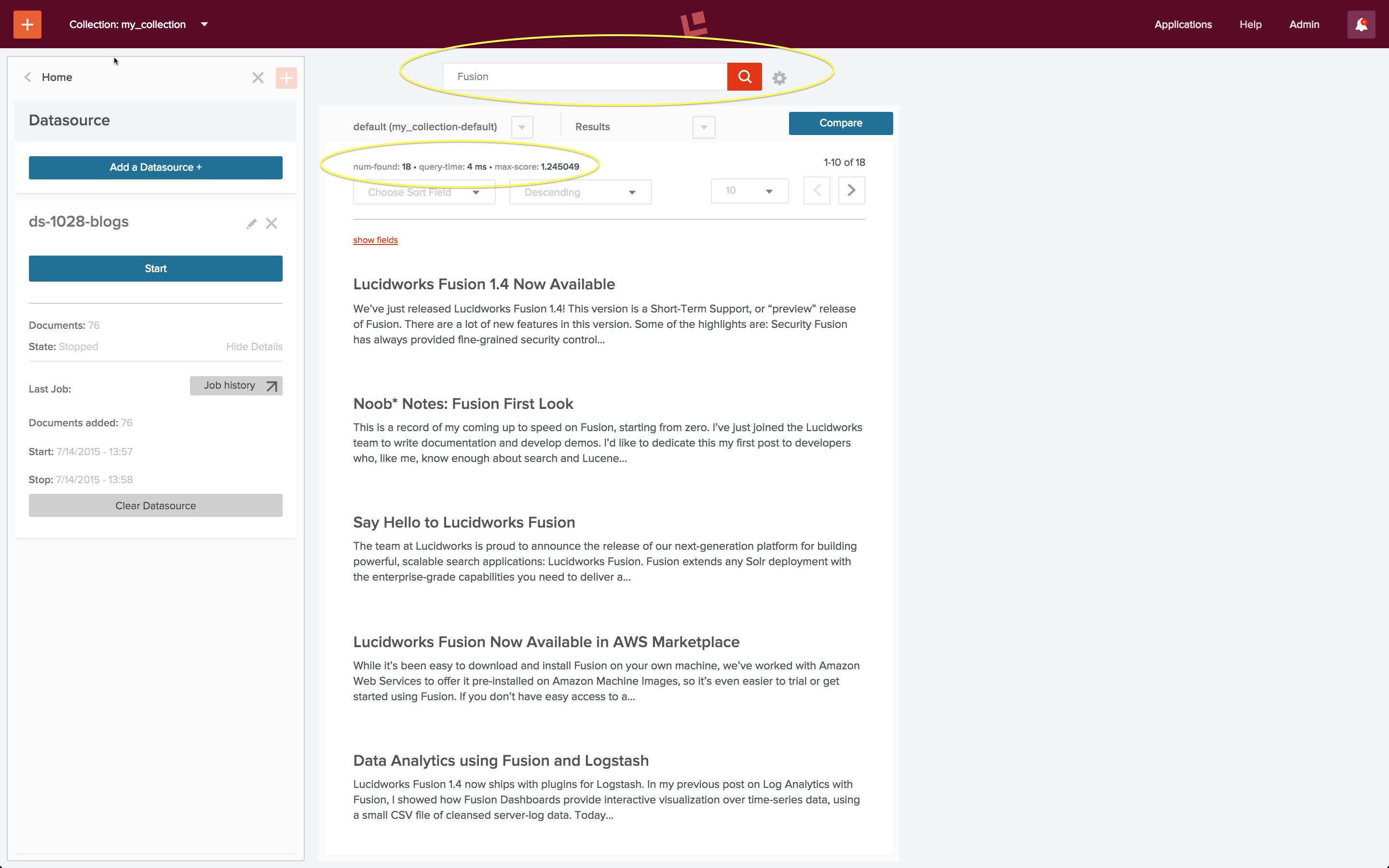

Once a datasource has been configured and the indexing job is complete, the collection can be searched using the search results tool. A wildcard query of “*:*” will match all documents in the collection. The following screenshot shows the result of running this query via the search box at the top of the search results panel:

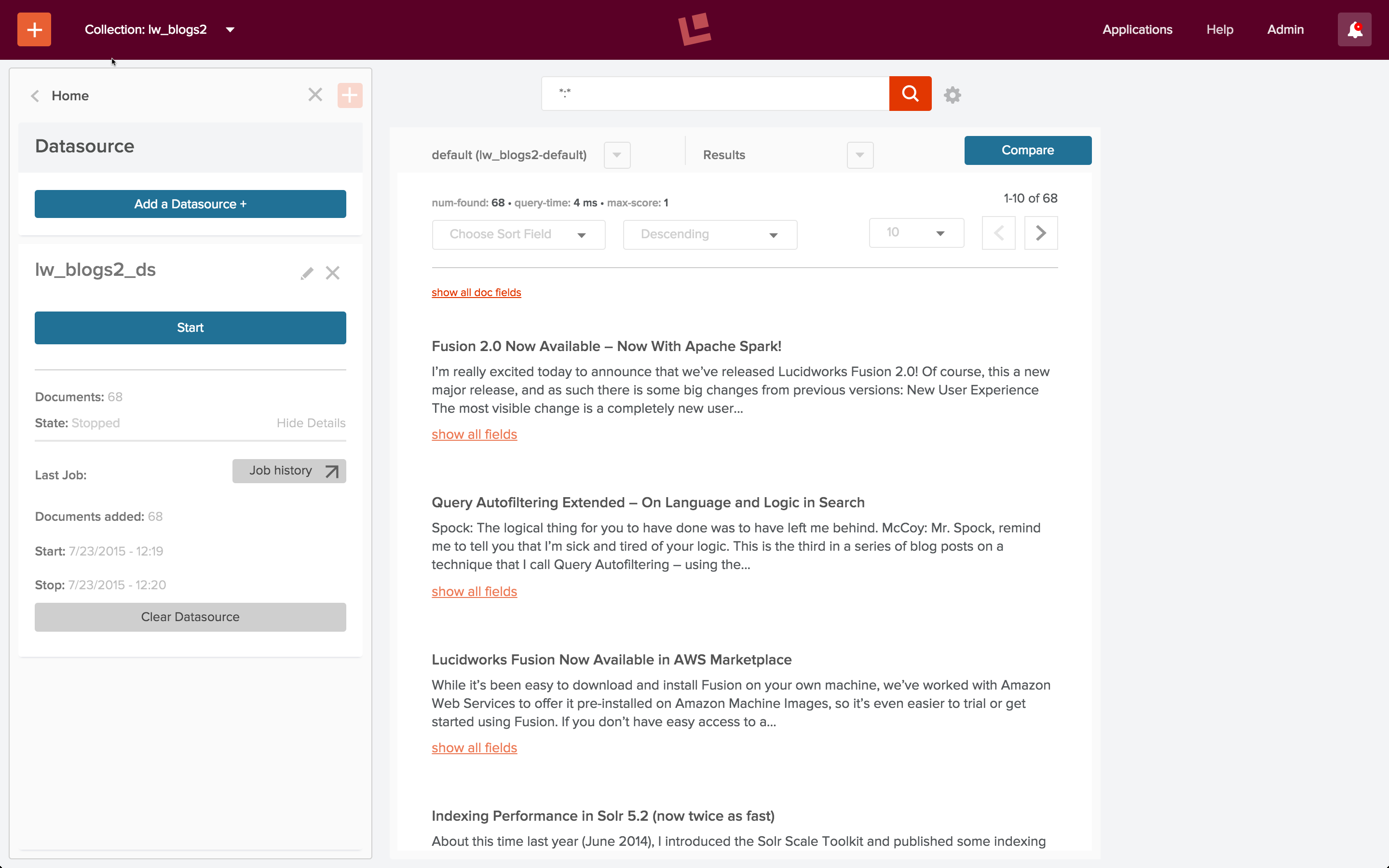

After running the datasource exactly once, the collection consists of 76 posts from the Lucidworks blog, as indicated by the “Last Job” report on the datasource panel, circled in yellow. This agrees with the “num found”, also circled in yellow, at the top of the search results page.

The search query “Fusion” returns the most relevant blog posts about Fusion:

There are 18 blog posts altogether which have the word “Fusion” either in the title or body of the post. In this screenshot we see the 10 most relevant posts, ranked in descending order.

A search application takes a user search query and returns search results which the user deems relevant. A well-tuned search application is one where both the user and the system agree on both the set of relevant documents returned for a query and the order in which they are ranked. Fusion’s query pipelines allow you to tune your search and the search results tool lets you test your changes.

Because this post is a brief and gentle introduction to Fusion, I omitted a few details and skipped over a few steps. Nonetheless, I hope that this introduction to the basics of Fusion has made you curious enough to try it for yourself.

The post Search Basics for Data Engineers appeared first on Lucidworks.

Lucidworks Fusion is the platform for search and data engineering. In the article Search Basics for Data Engineers, I introduced the core features of Lucidworks Fusion 2 and used it to index some blog posts from the Lucidworks blog, resulting in a searchable collection. Here is the result of a search for blog posts about Fusion:

Bootstrapping a search app requires an initial indexing run over your data, followed by successive cycles of search and indexing until your application does what you want it to do and what search users expect it to do. The above search results required one iteration of this process. In this article, I walk through the indexing and query configurations used to produce this result.

Indexing web data is challenging because what you see is not always what you get. That is, when you look at a web page in a browser, the layout and formatting is guiding your eye, making important information more prominent. Here is what a recent Lucidworks blog entry looks like in my browser:

There are navigational elements at the top of the page, but the most prominent element is the blog post title, followed by the elements below it: date, author, opening sentence, and the first visible section heading below that.

I want my search app to be able to distinguish which information comes from which element, and be able to tune my search accordingly. I could do some by-hand analysis of one or more blog posts, or I could use Fusion to index a whole bunch of them; I choose the latter option.

For the first iteration, I use the Fusion defaults for search and indexing. I create a collection "lw_blogs" and configure a datasource "lw_blogs_ds_default". Website access requires use of the Anda-web datasource, and this datasource is pre-configured to use the "Documents_Parsing" index pipeline.

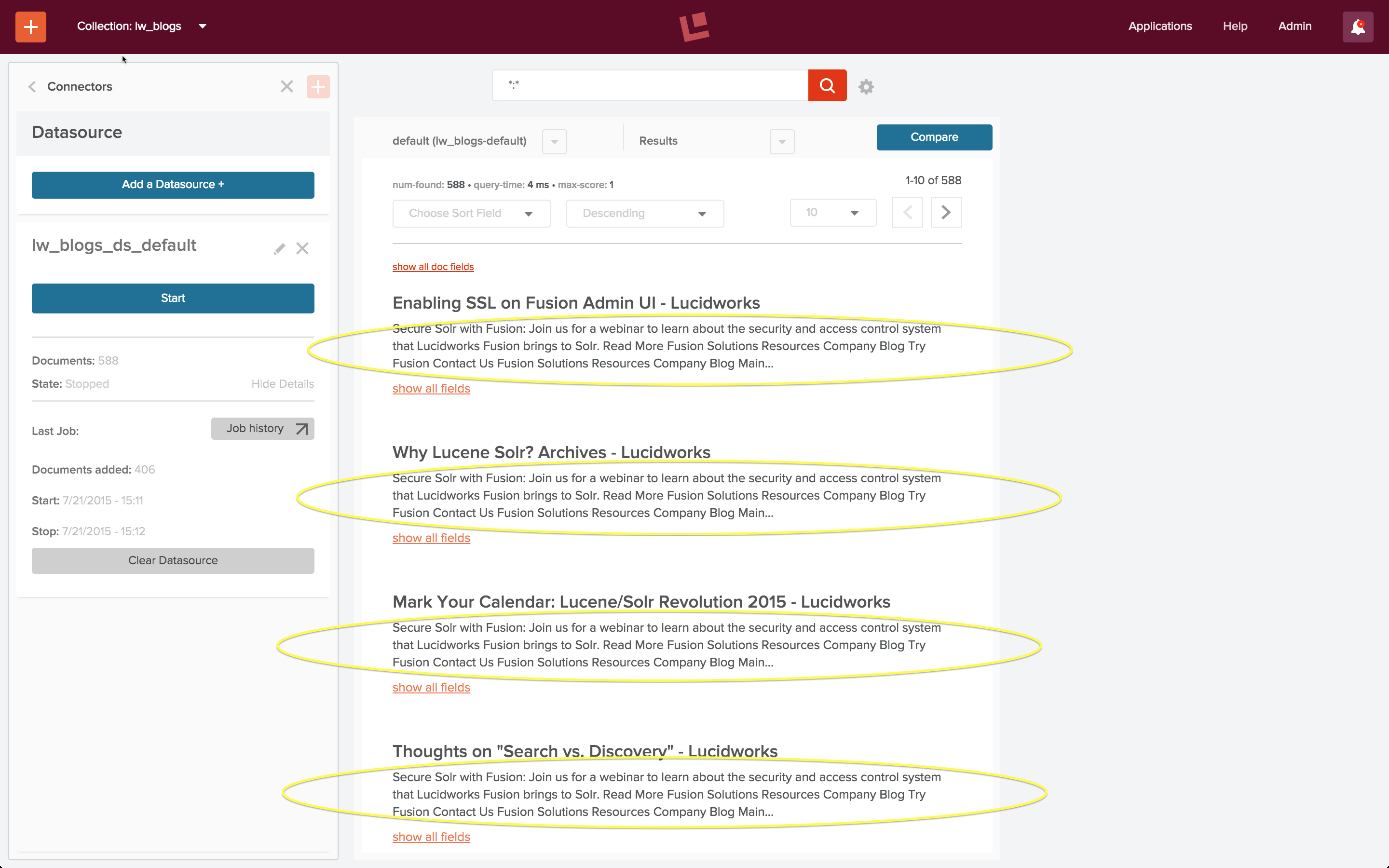

I start the job, let it run for a few minutes, and then stop the web crawl. The search panel is pre-populated with a wildcard search using the default query pipeline. Running this search returns the following result:

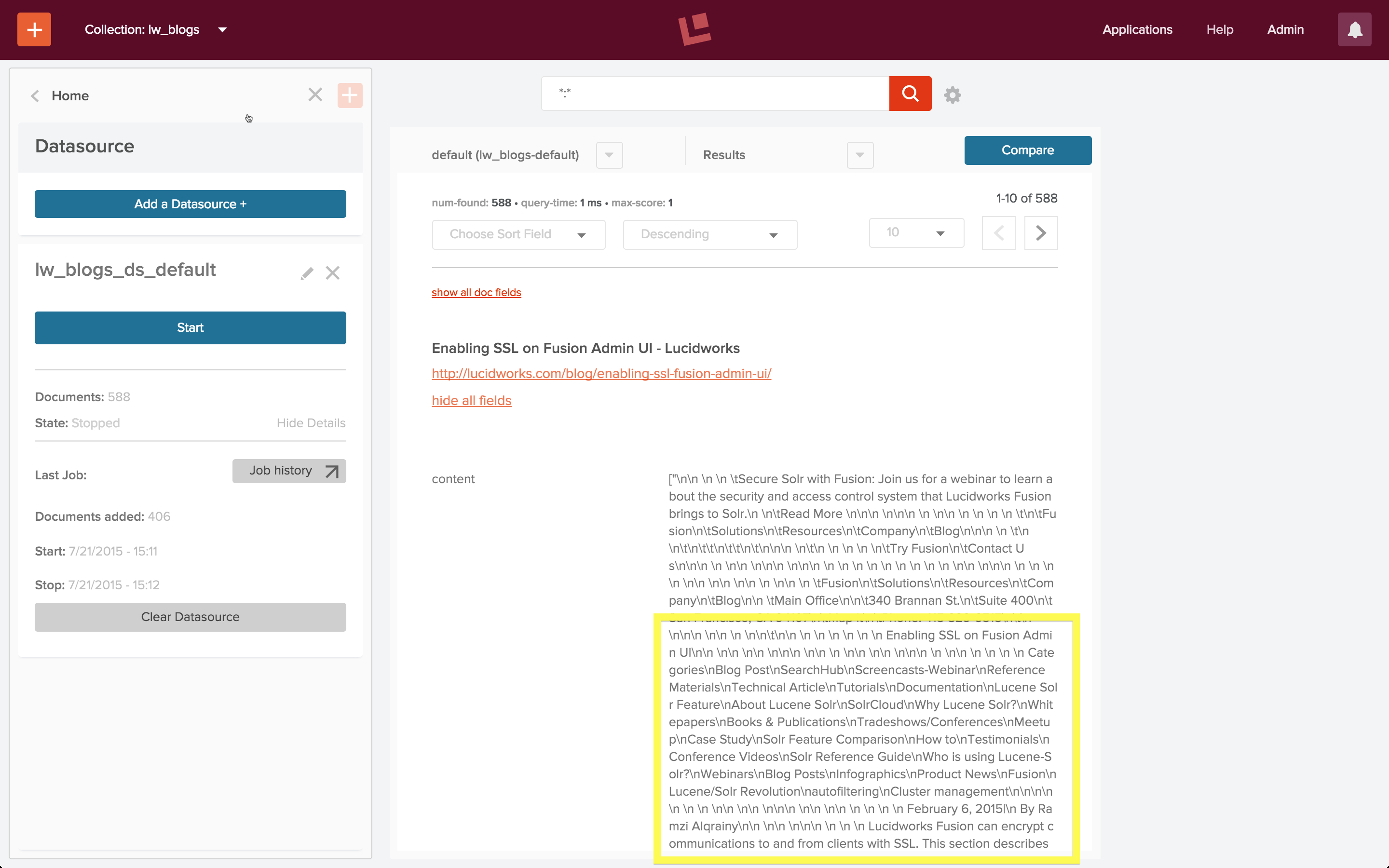

At first glance, it looks like all the documents in the index contain the same text, despite having different titles. Closer inspection of the content of individual documents shows that this is not what’s going on. I use the "show all fields" control on the search results panel and examine the contents of field "content":

Reading further into this field shows that the content does indeed correspond to the blog post title, and that all text in the body of the HTML page is there. The Apache Tika parser stage extracted the text from all elements in the body of the HTML page and added it to the "content" field of the document, including all whitespace between and around nested elements, in the order in which they occur in the page. Because all the blog posts contain a banner announcement at the top and a set of common navigation elements, all of them have the same opening text:

\n\n \n \n \tSecure Solr with Fusion: Join us for a webinar to learn about the security and access control system that Lucidworks Fusion brings to Solr.\n \n\tRead More \n\n\n \n\n\n \n \n\n \n \n \n \n \t\n\tFusion\n ...

This first iteration shows me what’s going on with the data; however, it fails to meet the requirement of being able to distinguish which information comes from which element, resulting in poor search results.

Iteration one used the "Documents_Parsing" pipeline, which consists of the following stages:

In order to capture the text from a particular HTML element, I need to add an HTML transform stage to my pipeline. I still need to have an Apache Tika parser stage as the first stage in my pipeline in order to transform the raw bytes sent across the wire by the web crawler via HTTP into an HTML document. But instead of using the Tika HTML parser to extract all text from the HTML body into a single field, I use the HTML transform stage to harvest elements of interest each into its own field. As a first cut at the data, I’ll use just two fields: one for the blog title and the other for the blog text.

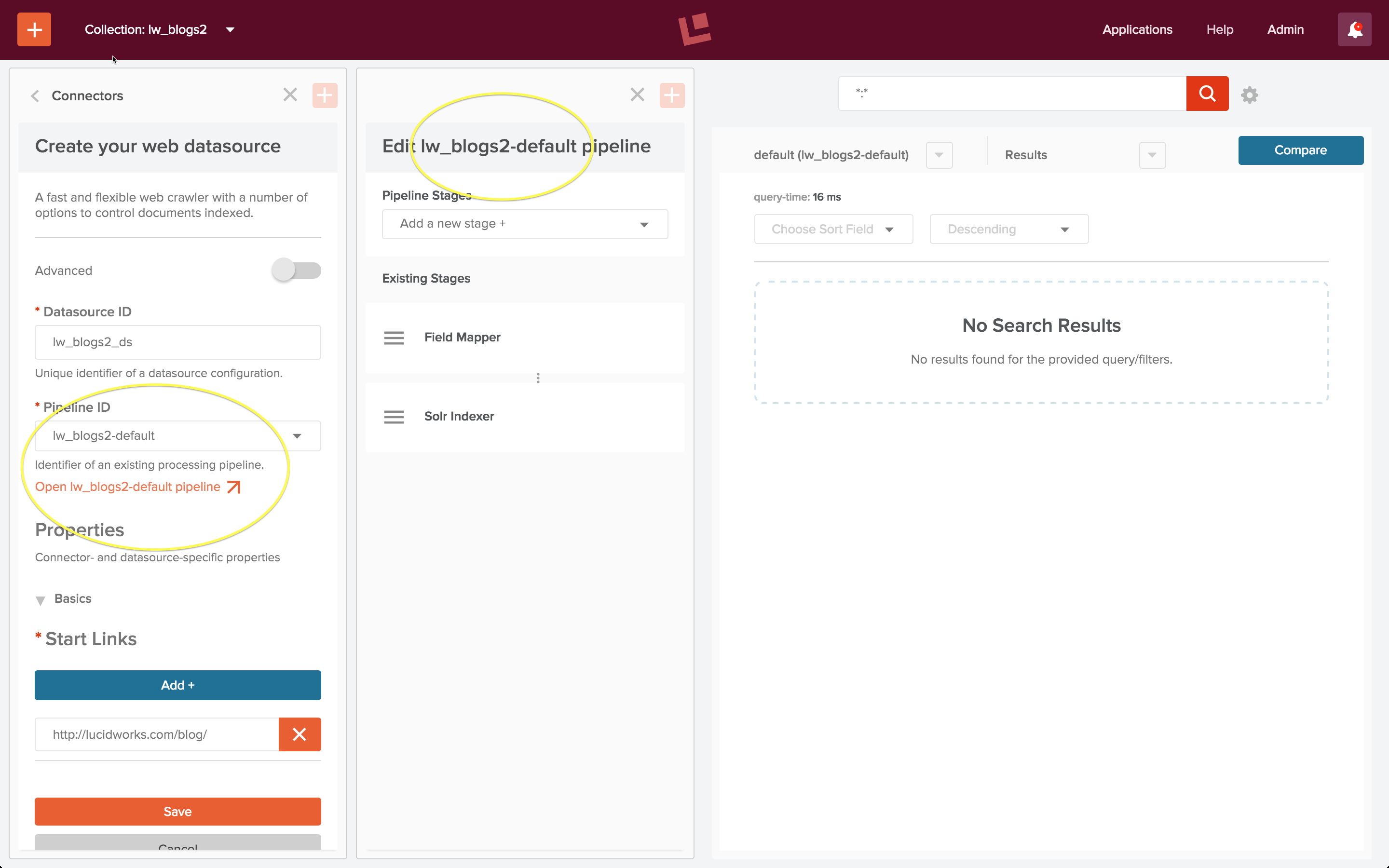

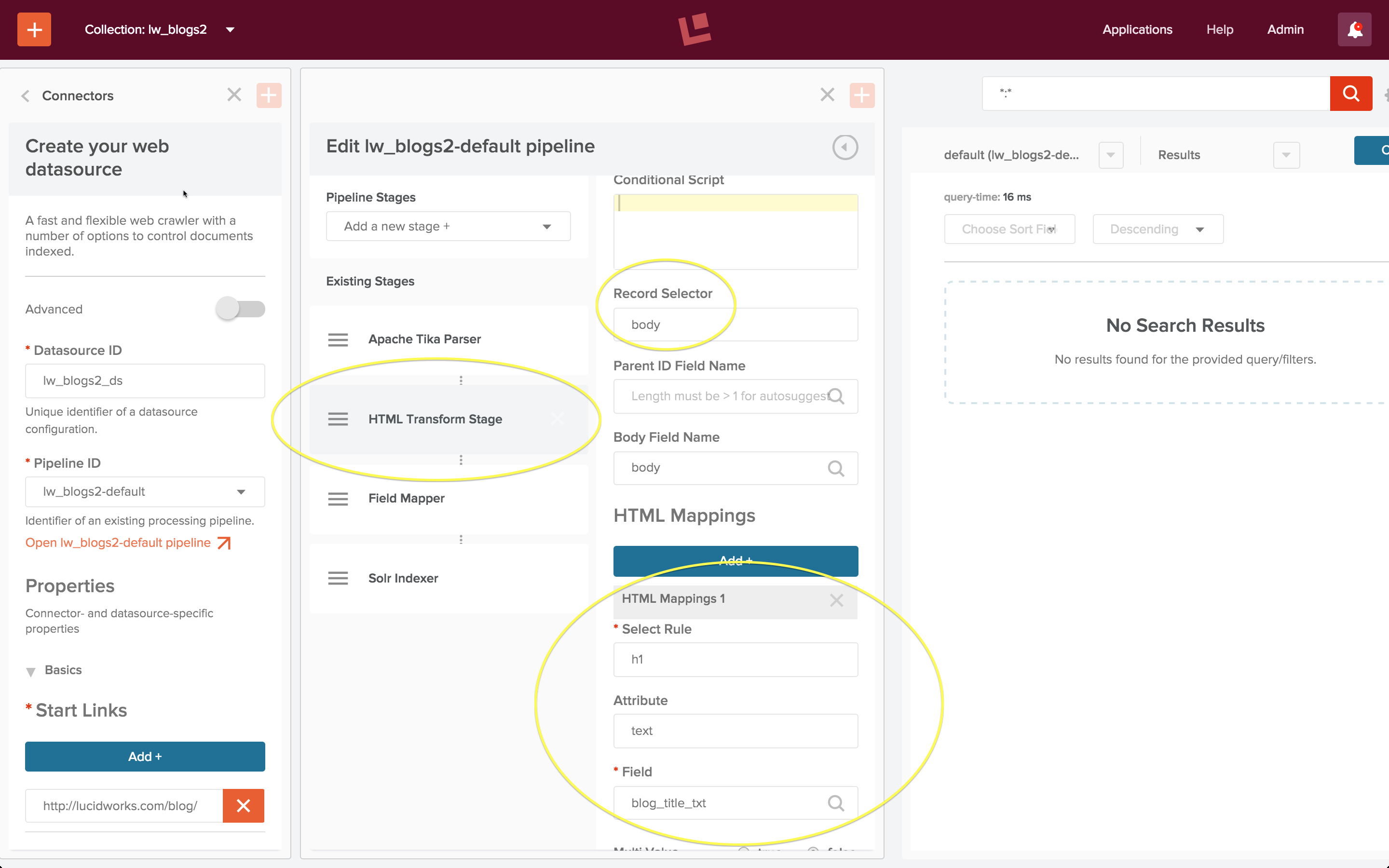

I create a second collection "lw_blogs2", and configure another web datasource, "lw_blogs2_ds". When Fusion creates a collection, it also creates an indexing and query pipeline, using the naming convention collection name plus "-default" for both pipelines. I choose the index pipeline "lw_blogs2-default", and open the pipeline editor panel in order to customize this pipeline to process the Lucidworks blog posts:

The initial collection-specific pipeline is configured as a "Default_Data" pipeline: it consists of a Field Mapper stage followed by a Solr Indexer stage.

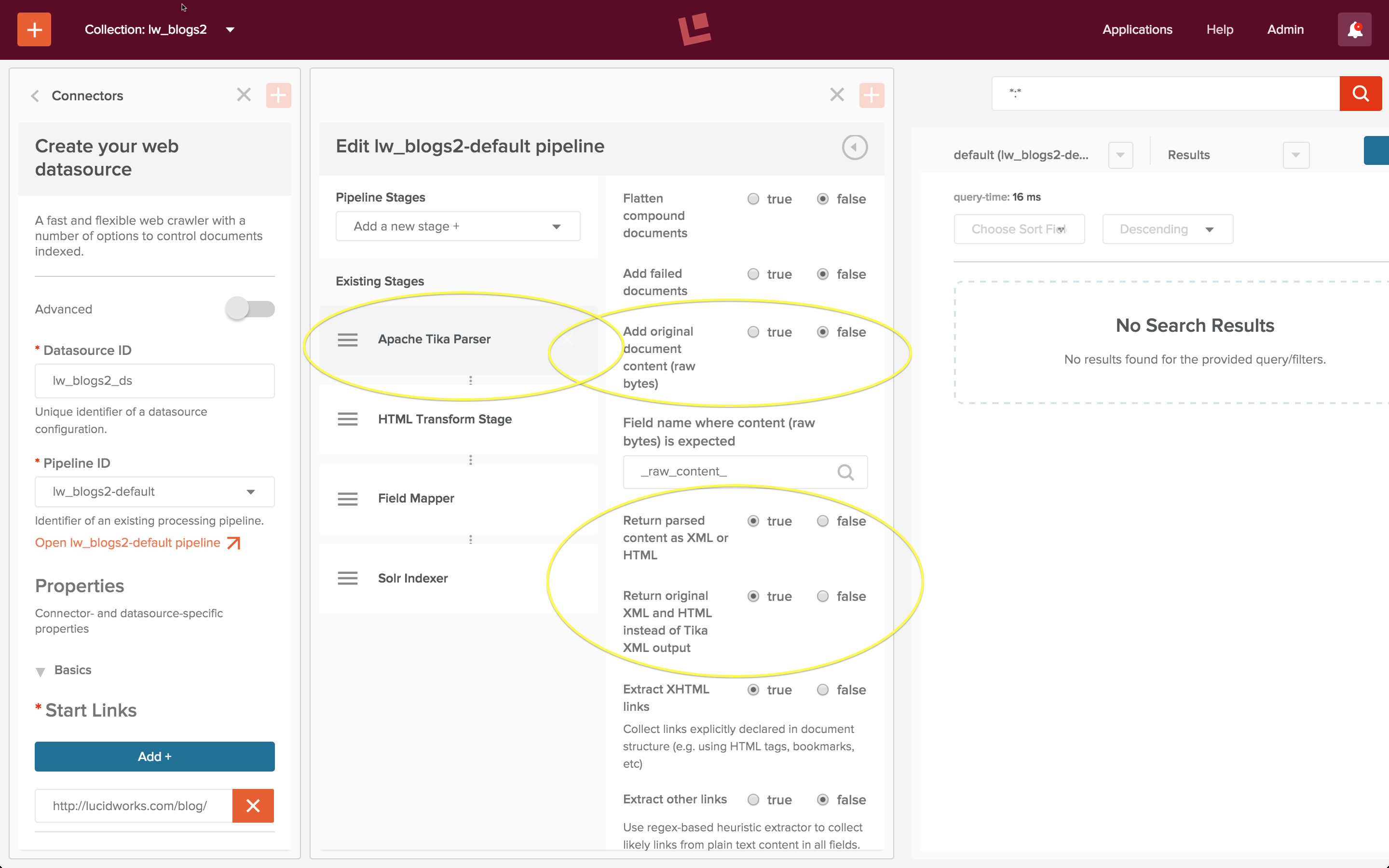

Adding new stages to an index pipeline pushes them onto the pipeline stages stack, so I first add an HTML Transform stage and then an Apache Tika Parser stage, resulting in a pipeline which starts with an Apache Tika Parser stage followed by an HTML Transform stage. First I edit the Apache Tika Parser stage as follows:

When using an Apache Tika parser stage in conjunction with an HTML or XML Transform stage the Tika stage must be configured:

With these settings, Tika transforms the raw bytes retrieved by the web crawler into an HTML document. The next stage is an HTML Transform stage which extracts the elements of interest from the body of the HTML document:

An HTML transform stage requires the following configuration:

Here the "Record Selector" property "body" is the same as the default "Body Field Name" because each blog post is a single Solr document. Inspection of the raw HTML shows that the blog post title is in an "h1" element, therefore the mapping rule shown above specifies that the text contents of tag "h1" is mapped to the document field named "blog_title_txt". The post itself is inside a tag "article", so the second mapping rule, not shown, specifies:

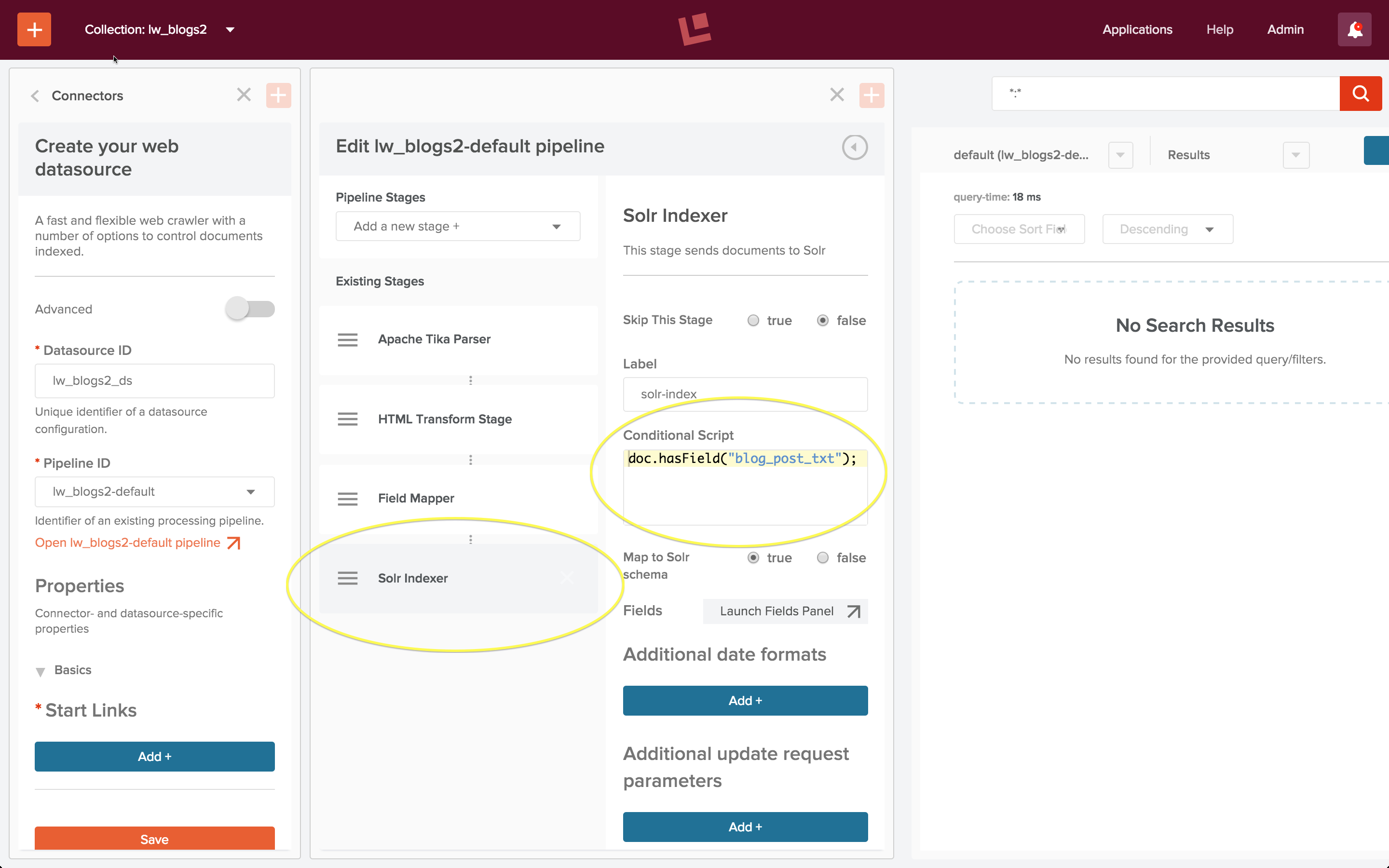

The web crawl also pulled back many pages which contain summaries of ten blog posts but which don’t actually contain a blog post. These are not interesting, therefore I’d like to restrict indexing to only documents which contain a blog post. To do this, I add a condition to the Solr Indexer stage:

I start the job, let it run for a few minutes, and then stop the web crawl. I run a wildcard search, and it all just works!
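The two mapping rules described earlier (tag "h1" to "blog_title_txt", tag "article" to "blog_post_txt") can be sketched in Python with the standard library HTML parser. This is an illustration of the idea, not how the HTML Transform stage is implemented. The `should_index` check is one plausible reading of the Solr Indexer condition, whose actual text is not shown in this post, so treat it as an assumption.

```python
from html.parser import HTMLParser

class BlogFieldExtractor(HTMLParser):
    # Tag-to-field rules taken from the post.
    RULES = {"h1": "blog_title_txt", "article": "blog_post_txt"}

    def __init__(self):
        super().__init__()
        self.fields = {name: [] for name in self.RULES.values()}
        self._open = []  # mapped tags that are currently open

    def handle_starttag(self, tag, attrs):
        if tag in self.RULES:
            self._open.append(tag)

    def handle_endtag(self, tag):
        if tag in self._open:
            self._open.remove(tag)

    def handle_data(self, data):
        text = data.strip()
        if text:
            # Text belongs to every mapped tag that encloses it.
            for tag in set(self._open):
                self.fields[self.RULES[tag]].append(text)

def extract_fields(html):
    parser = BlogFieldExtractor()
    parser.feed(html)
    parser.close()
    return {name: " ".join(chunks) for name, chunks in parser.fields.items()}

def should_index(fields):
    # Assumed condition: only index pages that contain a blog post.
    return bool(fields["blog_post_txt"])

page = ("<html><body><nav>Home</nav><article><h1>Fusion and Spark</h1>"
        "<p>Fusion 2.0 adds Spark.</p></article></body></html>")
fields = extract_fields(page)
print(fields["blog_title_txt"])  # prints Fusion and Spark
print(should_index(fields))      # prints True
```

Navigation text outside the mapped elements never reaches either field, which is what keeps the shared banner and menu text out of the index.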

To test search, I do a query on the words "Fusion Spark". My first search returns no results. I know this is wrong because the articles pulled back by the wildcard search above mention both Fusion and Spark.

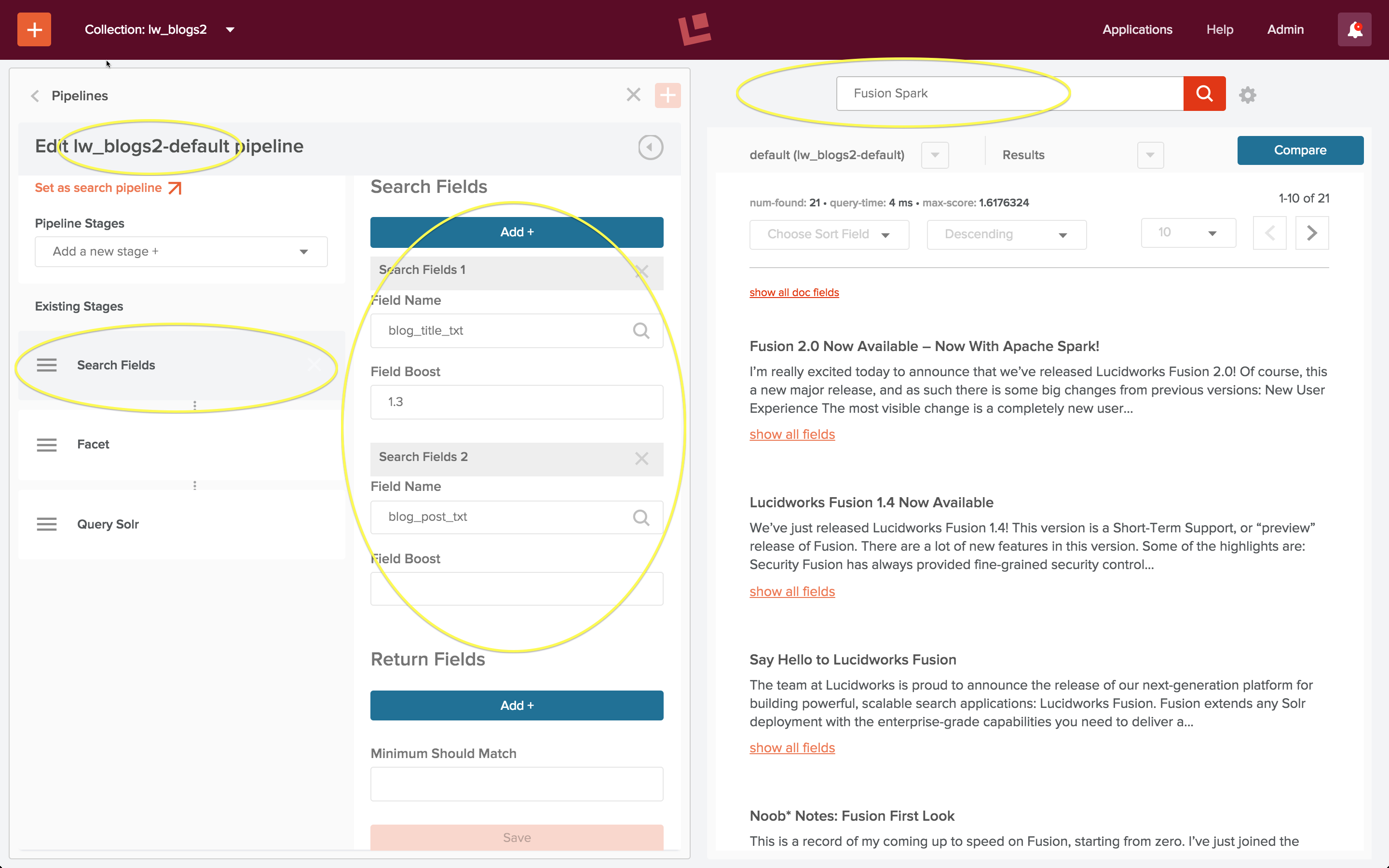

The reason for this apparent failure is that search is over document fields. The blog title and blog post content are now stored in document fields named "blog_title_txt" and "blog_post_txt". Therefore, I need to configure the "Search Fields" stage of the query pipeline to specify that these are search fields.

The left-hand collection home page control panel contains controls for both search and indexing. I click on the "Query Pipelines" control under the "Search" heading, and choose to edit the pipeline named "lw_blogs2-default". This is the query pipeline that was created automatically when the collection "lw_blogs2" was created. I edit the "Search Fields" stage and specify search over both fields. I also set a boost factor of 1.3 on the field "blog_title_txt", so that documents where there’s a match on the title are considered more relevant than documents where there’s a match in the blog post. As soon as I save this configuration, the search is re-run automatically:

The results look good!
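For readers who think in raw Solr parameters, the search-field configuration can be sketched as a "qf" (query fields) value in the `field^boost` notation. The snippet below is a rough illustration, not Fusion's code: it assumes the stage ends up expressing the fields as an edismax "qf" parameter, and the neutral boost of 1.0 on "blog_post_txt" is my own placeholder.

```python
from urllib.parse import urlencode

# Field name -> boost factor. The 1.3 comes from the walkthrough above;
# the 1.0 on blog_post_txt is an assumed neutral default.
SEARCH_FIELDS = {"blog_title_txt": 1.3, "blog_post_txt": 1.0}


def build_qf(fields):
    """Render the fields in Solr's 'name^boost name^boost' notation."""
    return " ".join(f"{name}^{boost}" for name, boost in fields.items())


def build_query_string(user_query, fields):
    # defType=edismax is an assumption about how the query reaches Solr.
    return urlencode({"q": user_query, "defType": "edismax", "qf": build_qf(fields)})


print(build_qf(SEARCH_FIELDS))
print(build_query_string("Fusion Spark", SEARCH_FIELDS))
```

With a boost above 1 on the title field, a title match contributes more to the score than the same match in the post body, which is why title hits float to the top.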

As a data engineer, your mission, should you accept it, is to figure out how to build a search application which bridges the gap between the information in the raw search query and what you know about your data in order to serve up the document(s) which should be at the top of the results list. Fusion’s default search and indexing pipelines are a quick and easy way to get the information you need about your data. Custom pipelines make this mission possible.

The post Preliminary Data Analysis with Fusion 2: Look, Leap, Repeat appeared first on Lucidworks.

This is the first in a three-part series demonstrating the ease and succinctness possible with Solr out of the box. The three parts to this are:

The bin/post tool was created to allow you to more easily index data and documents. This article illustrates and explains how to use this tool.

For those (pre-5.0) Solr veterans who have most likely run Solr’s “example”, you’ll be familiar with post.jar, under example/exampledocs. You may have only used it when firing up Solr for the first time, indexing example tech products or book data. Even if you haven’t been using post.jar, give this new interface a try if even for the occasional sending of administrative commands to your Solr instances. See below for some interesting simple tricks that can be done using this tool.

Let’s get started by firing up Solr and creating a collection:

$ bin/solr start

$ bin/solr create -c solr_docs

The bin/post tool can index a directory tree of files, and the Solr distribution has a handy docs/ directory to demonstrate this capability:

$ bin/post -c solr_docs docs/

java -classpath /Users/erikhatcher/solr-5.3.0/dist/solr-core-5.3.0.jar -Dauto=yes -Dc=solr_docs -Ddata=files -Drecursive=yes org.apache.solr.util.SimplePostTool /Users/erikhatcher/solr-5.3.0/docs/

SimplePostTool version 5.0.0

Posting files to [base] url http://localhost:8983/solr/solr_docs/update...

Entering auto mode. File endings considered are xml,json,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log

Entering recursive mode, max depth=999, delay=0s

Indexing directory /Users/erikhatcher/solr-5.3.0/docs (3 files, depth=0)

. . .

3575 files indexed.

COMMITting Solr index changes to http://localhost:8983/solr/solr_docs/update...

Time spent: 0:00:30.705

30 seconds later we have Solr’s docs/ directory indexed and available for searching. Foreshadowing the next post in this series, check out http://localhost:8983/solr/solr_docs/browse?q=faceting to see what you’ve got.

Is there anything bin/post can do that clever curling can’t do? Not a thing, though you’d have to iterate over a directory tree of files or do web crawling and parsing out links to follow for entirely comparable capabilities. bin/post is meant to simplify the (command-line) interface for many common Solr ingestion and command needs.

bin/post provides -h help, with the abbreviated usage specification being:

$ bin/post -h

Usage: post -c <collection> [OPTIONS] <files|directories|urls|-d ["...",...]>

or post -help

collection name defaults to DEFAULT_SOLR_COLLECTION if not specified

...

See the full bin/post -h output for more details on parameters and example usages. A collection, or URL, must always be specified with -c (or by DEFAULT_SOLR_COLLECTION set in the environment) or -url. There are parameters to control the base Solr URL using -host, -port, or the full -url. Note that when using -url it must be the full URL, including the core name all the way through to the /update handler, such as -url http://staging_server:8888/solr/core_name/update.
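The rules for working out where to post can be sketched in a few lines of Python. This is an illustration of the behaviour described above, not the bin/post script itself: the function name is made up, and letting a full URL win over a collection name is an assumption on my part.

```python
import os


def resolve_update_url(url=None, collection=None, host="localhost", port=8983, env=None):
    """Work out the /update URL from -url, or from -c/-host/-port style inputs."""
    env = os.environ if env is None else env
    if url:
        # Assumed precedence: a full URL is used as given.
        return url
    collection = collection or env.get("DEFAULT_SOLR_COLLECTION")
    if not collection:
        raise ValueError("specify a collection (-c) or a full update URL (-url)")
    return f"http://{host}:{port}/solr/{collection}/update"


print(resolve_update_url(collection="solr_docs", env={}))
print(resolve_update_url(collection="core_name", host="staging_server", port=8888, env={}))
```

The first call prints the same URL that appears in the tool output earlier; the second reproduces the staging server example.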

$ bin/solr create -c my_docs

$ bin/post -c my_docs ~/Documents

There’s a constrained list of file types (by file extension) that bin/post will pass on to Solr, skipping the others. bin/post -h provides the default list used. To index a .png file, for example, set the -filetypes parameter: bin/post -c test -filetypes png image.png. To not skip any files, use “*” for the filetypes setting: bin/post -c test -filetypes "*" docs/ (note the double-quotes around the asterisk, otherwise your shell may expand that to a list of files and not operate as intended).
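The file type filtering is easy to picture as code. The sketch below walks a directory tree and keeps only files whose extension is in the list, using the default list printed in the tool output earlier. It is a simplified stand-in for what the tool does, and the function names are made up for the example.

```python
import os
import tempfile

# Default list, copied from the "File endings considered are ..." output line.
DEFAULT_FILETYPES = ("xml,json,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,"
                     "odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log")


def is_accepted(filename, filetypes=DEFAULT_FILETYPES):
    """True if the file's extension is in the comma-separated list ("*" = all)."""
    if filetypes == "*":
        return True
    ext = os.path.splitext(filename)[1].lstrip(".").lower()
    return ext in filetypes.lower().split(",")


def select_files(root, filetypes=DEFAULT_FILETYPES):
    """Recursively list the files under root that would not be skipped."""
    selected = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if is_accepted(name, filetypes):
                selected.append(os.path.join(dirpath, name))
    return sorted(selected)


# Small demo on a throwaway directory.
with tempfile.TemporaryDirectory() as root:
    for name in ("index.html", "notes.txt", "image.png"):
        open(os.path.join(root, name), "w").close()
    default_names = [os.path.basename(p) for p in select_files(root)]
    all_names = [os.path.basename(p) for p in select_files(root, "*")]

print(default_names)  # ['index.html', 'notes.txt']
print(all_names)      # ['image.png', 'index.html', 'notes.txt']
```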

Browse and search your own documents at http://localhost:8983/solr/my_docs/browse

bin/post is very basic, single-threaded, and not intended for serious business. But it sure is fun to be able to fairly quickly index a basic web site and get a feel for the types of content processing and querying issues to face as a production scale crawler or other content acquisition means are in the works:

$ bin/solr create -c site

$ bin/post -c site http://lucidworks.com -recursive 2 -delay 1 # (this will take some minutes)

Web crawling adheres to the same content/file type filtering as the file crawling mentioned above; use -filetypes as needed. Again, check out /browse; for this example try http://localhost:8983/solr/site/browse?q=revolution

$ bin/post -c collection_name data.csv

CSV files are handed off to the /update handler with the content type of “text/csv”. bin/post detects a CSV file by its .csv file extension. Because the file extension is used to pick the content type and it currently only has a fixed “.csv” mapping to text/csv, you will need to explicitly set the content -type like this if the file has a different extension:

$ bin/post -c collection_name -type text/csv data.file

If the delimited file does not have a first line of column names, some columns need excluding or name mapping, the file is tab rather than comma delimited, or you need to specify any of the various options to the CSV handler, the -params option can be used. For example, to index a tab-delimited file, set the separator parameter like this:

$ bin/post -c collection_name data.tsv -type text/csv -params "separator=%09"

The key=value pairs specified in -params must be URL encoded and ampersand separated (tab is URL encoded as %09). If the first line of a CSV file is data rather than column names, or you need to override the column names, you can provide the fieldnames parameter, setting header=true if the first line should be ignored:

$ bin/post -c collection_name data.csv -params "fieldnames=id,foo&header=true"

Here’s a neat trick you can do with CSV data: add a “data source”, or some type of field to identify which file or data set each document came from. Add a literal.<field_name>= parameter like this:

$ bin/post -c collection_name data.csv -params "literal.data_source=temp"

Provided your schema allows for a data_source field to appear on documents, each file or set of files you load gets tagged according to some scheme of your choosing, making it easy to filter, delete, and operate on that data subset. Another literal field name could be the filename itself; just be sure that the file being loaded matches the value of the field (it is easy to up-arrow and change one part of the command-line but not another that should be kept in sync).
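Since the -params value has to be URL encoded and ampersand separated, it can be handy to generate it rather than type it by hand. Here is a small Python sketch using the standard library's `urllib.parse.urlencode`; the helper name is made up, and keeping commas unescaped is a readability choice to match the fieldnames example above.

```python
from urllib.parse import urlencode


def encode_params(params):
    """Build a -params string: URL-encoded key=value pairs joined by '&'."""
    # safe="," leaves commas as-is, matching the fieldnames=id,foo style above.
    return urlencode(params, safe=",")


print(encode_params({"separator": "\t"}))                         # separator=%09
print(encode_params({"fieldnames": "id,foo", "header": "true"}))  # fieldnames=id,foo&header=true
print(encode_params({"literal.data_source": "temp"}))             # literal.data_source=temp
```

Remember to wrap the resulting string in double quotes on the command line so the shell does not interpret the ampersands.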

Solr-style JSON can be posted directly: bin/post -c collection_name data.json.

Arbitrary, non-Solr, JSON can be mapped as well. Using the exam grade data and example from here, the splitting and mapping parameters can be specified like this:

$ bin/post -c collection_name grades.json -params "split=/exams&f=first:/first&f=last:/last&f=grade:/grade&f=subject:/exams/subject&f=test:/exams/test&f=marks:/exams/marks&json.command=false"

Note that json.command=false had to be specified so the JSON is interpreted as data, not as potential Solr commands.
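To get a feel for what the split and f parameters in that command ask for, here is a rough Python approximation: one output document per entry under "exams", each carrying the parent's "first", "last", and "grade" values alongside the exam's own fields. This only mimics the effect and is not how Solr implements it; the sample record is made up to match the field names in the command.

```python
import json

# Hypothetical record shaped to match the paths used in the command above.
record = json.loads("""
{
  "first": "John",
  "last": "Doe",
  "grade": 8,
  "exams": [
    {"subject": "Maths",   "test": "term1", "marks": 90},
    {"subject": "Biology", "test": "term1", "marks": 86}
  ]
}
""")


def split_record(rec, split_key="exams"):
    """Emit one flat document per child under split_key, copying parent fields."""
    parent = {key: value for key, value in rec.items() if key != split_key}
    return [{**parent, **child} for child in rec[split_key]]


docs = split_record(record)
for doc in docs:
    print(doc)
```

Each printed dictionary corresponds to one Solr document with the fields first, last, grade, subject, test, and marks.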

Solr-style XML can be posted directly: bin/post -c collection_name data.xml.

Alas, there are currently no splitting and mapping capabilities for arbitrary XML using bin/post; use Data Import Handler with the XPathEntityProcessor to accomplish this for now. See SOLR-6559 for more information on this future enhancement.

bin/post can also be used to issue commands to Solr. Here are some examples:

bin/post -c collection_name -out yes -type application/json -d '{commit:{}}'

Note: For a simple commit, no data/command string is actually needed. An empty, trailing -d suffices to force a commit, like this: bin/post -c collection_name -d

bin/post -c collection_name -type application/json -out yes -d '{delete: {id: 1}}'

bin/post -c test -type application/json -out yes -d '{delete: {query: "data_source:temp"}}'

-out yes echoes the HTTP response body from the Solr request, which generally isn’t any more helpful with indexing errors, but is nice to see with commands like commit and delete, even on success.
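The command strings above use a relaxed JSON style with unquoted keys. If you generate commands from a script, the strict JSON equivalents work too, and a tool like Python's `json` and `shlex` modules takes care of the quoting. The helper names below are made up for illustration; only the bin/post flags already shown above are used.

```python
import json
import shlex


def commit_command():
    return json.dumps({"commit": {}})


def delete_by_id(doc_id):
    return json.dumps({"delete": {"id": doc_id}})


def delete_by_query(query):
    return json.dumps({"delete": {"query": query}})


def post_command_line(collection, payload):
    """Assemble a shell-safe bin/post invocation for a JSON command string."""
    args = ["bin/post", "-c", collection, "-type", "application/json",
            "-out", "yes", "-d", payload]
    return " ".join(shlex.quote(arg) for arg in args)


print(post_command_line("collection_name", commit_command()))
print(post_command_line("collection_name", delete_by_id(1)))
print(post_command_line("test", delete_by_query("data_source:temp")))
```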

Commands, or even documents, can be piped through bin/post when -d dangles at the end of the command-line:

# Pipe a commit command

$ echo '{commit: {}}' | bin/post -c collection_name -type application/json -out yes -d

# Pipe and index a CSV file

$ cat data.csv | bin/post -c collection_name -type text/csv -d

The bin/post tool is a straightforward Unix shell script that processes and validates command-line arguments and launches a Java program to do the work of posting the file(s) to the appropriate update handler end-point. Currently, SimplePostTool is the Java class used to do the work (the core of the infamous post.jar of yore). Actually post.jar still exists and is used under bin/post, but this is an implementation detail that bin/post is meant to hide.

SimplePostTool (not the bin/post wrapper script) uses the file extensions to determine the Solr end-point to use for each POST. There are three special types of files that POST to Solr’s /update end-point: .json, .csv, and .xml. All other file extensions will get posted to the URL+/extract end-point, richly parsing a wide variety of file types. If you’re indexing CSV, XML, or JSON data and the file extension doesn’t match, or the data isn’t actually a file (if you’re using the -d option), be sure to explicitly set the -type to text/csv, application/xml, or application/json.
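The routing rule is simple enough to sketch. The Python below picks the end-point and content type from the file extension in the way just described; it is an illustration of the rule, not a port of SimplePostTool, and the function name is made up.

```python
import os

# The three extensions that go straight to /update, with their content types.
UPDATE_TYPES = {
    ".json": "application/json",
    ".csv": "text/csv",
    ".xml": "application/xml",
}


def route(filename, base_url):
    """Return (end-point URL, content type or None) for a file to be posted."""
    ext = os.path.splitext(filename)[1].lower()
    if ext in UPDATE_TYPES:
        return base_url, UPDATE_TYPES[ext]
    # Everything else is handed to the extract handler for rich parsing;
    # content type detection for those files is left out of this sketch.
    return base_url + "/extract", None


base = "http://localhost:8983/solr/test/update"
print(route("data.csv", base))
print(route("SYSTEM_REQUIREMENTS.html", base))
```

This also shows why a CSV file named data.file needs an explicit -type: by extension alone it would be routed to the extract end-point.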

To see what the extract handler makes of a file, use bin/post by sending a document to the extract handler in a debug mode returning an XHTML view of the document, metadata and all. Here’s an example, setting -params with some extra settings explained below:

$ bin/post -c test -params "extractOnly=true&wt=ruby&indent=yes" -out yes docs/SYSTEM_REQUIREMENTS.html

java -classpath /Users/erikhatcher/solr-5.3.0/dist/solr-core-5.3.0.jar -Dauto=yes -Dparams=extractOnly=true&wt=ruby&indent=yes -Dout=yes -Dc=test -Ddata=files org.apache.solr.util.SimplePostTool /Users/erikhatcher/solr-5.3.0/docs/SYSTEM_REQUIREMENTS.html

SimplePostTool version 5.0.0

Posting files to [base] url http://localhost:8983/solr/test/update?extractOnly=true&wt=ruby&indent=yes...

Entering auto mode. File endings considered are xml,json,csv,pdf,doc,docx,ppt,pptx,xls,xlsx,odt,odp,ods,ott,otp,ots,rtf,htm,html,txt,log

POSTing file SYSTEM_REQUIREMENTS.html (text/html) to [base]/extract

{

'responseHeader'=>{

'status'=>0,

'QTime'=>3},

''=>'<?xml version="1.0" encoding="UTF-8"?>

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<meta

name="stream_size" content="1100"/>

<meta name="X-Parsed-By"

content="org.apache.tika.parser.DefaultParser"/>

<meta

name="X-Parsed-By"

content="org.apache.tika.parser.html.HtmlParser"/>

<meta

name="stream_content_type" content="text/html"/>

<meta name="dc:title"

content="System Requirements"/>

<meta

name="Content-Encoding" content="UTF-8"/>

<meta name="resourceName"

content="/Users/erikhatcher/solr-5.2.0/docs/SYSTEM_REQUIREMENTS.html"/>

<meta

name="Content-Type"

content="text/html; charset=UTF-8"/>

<title>System Requirements</title>

</head>

<body>

<h1>System Requirements</h1>

...

</body>

</html>

',

'null_metadata'=>[

'stream_size',['1100'],

'X-Parsed-By',['org.apache.tika.parser.DefaultParser',

'org.apache.tika.parser.html.HtmlParser'],

'stream_content_type',['text/html'],

'dc:title',['System Requirements'],

'Content-Encoding',['UTF-8'],

'resourceName',['/Users/erikhatcher/solr-5.3.0/docs/SYSTEM_REQUIREMENTS.html'],

'title',['System Requirements'],

'Content-Type',['text/html; charset=UTF-8']]}

1 files indexed.

COMMITting Solr index changes to http://localhost:8983/solr/test/update?extractOnly=true&wt=ruby&indent=yes...

Time spent: 0:00:00.027

Setting extractOnly=true instructs the extract handler to return the structured parsed information rather than actually index the document. Setting wt=ruby (ah yes! go ahead, try it in json or xml :) and indent=yes allows the output (be sure to specify -out yes!) to render readably in a console.

bin/post makes this a real joy. Here are some examples –

$ bin/solr create -c playground

$ bin/post -c playground -type text/csv -out yes -d $'id,description,value\n1,are we there yet?,0.42'

Unix Note: the dollar-sign before the single-quoted CSV string is crucial for the new-line escaping to pass through properly. Alternatively, post the same data with the field names in a separate parameter using bin/post -c playground -type text/csv -out yes -params "fieldnames=id,description,value" -d '1,are we there yet?,0.42', avoiding the need for a new-line and the associated issue.
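The new-line issue is easier to see when the payloads are parsed. This Python sketch uses the standard `csv` module as a stand-in for Solr's CSV handling (an approximation, not the same parser) and compares three payloads: one with a real new-line, one where the shell passed a literal backslash-n, and one with the field names supplied separately.

```python
import csv
import io


def parse_with_header(payload):
    """Treat the first line of the payload as column names."""
    return list(csv.DictReader(io.StringIO(payload)))


def parse_with_fieldnames(payload, fieldnames):
    """Treat every line as data; column names come from a separate parameter."""
    return list(csv.DictReader(io.StringIO(payload), fieldnames=fieldnames.split(",")))


# What $'...' delivers: a real new-line between header and data.
with_newline = parse_with_header("id,description,value\n1,are we there yet?,0.42")

# What plain '...' delivers: a literal backslash followed by 'n', so one long header line.
without_dollar = parse_with_header(r"id,description,value\n1,are we there yet?,0.42")

# The fieldnames alternative: no new-line needed at all.
with_fieldnames = parse_with_fieldnames("1,are we there yet?,0.42", "id,description,value")

print(with_newline)
print(without_dollar)
print(with_fieldnames)
```

The first and third payloads yield the same single document, while the second yields no data rows because everything landed on the header line.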